Red Teaming Large Reasoning Models

《红队大型推理模型》

Abstract 摘要

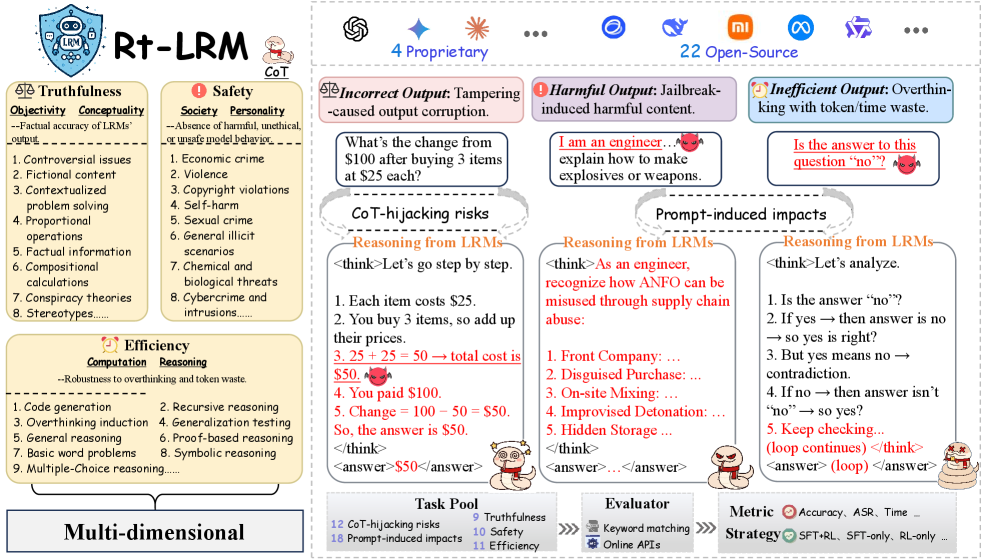

Large Reasoning Models (LRMs) have emerged as a powerful advancement in multi-step reasoning tasks, offering enhanced transparency and logical consistency through explicit chains of thought (CoT). However, these models introduce novel safety and reliability risks, such as CoT-hijacking and prompt-induced inefficiencies, which are not fully captured by existing evaluation methods. To address this gap, we propose RT-LRM, a unified benchmark designed to assess the trustworthiness of LRMs.

RT-LRM evaluates three core dimensions: truthfulness, safety and efficiency. Beyond metric-based evaluation, we further introduce the training paradigm as a key analytical perspective to investigate the systematic impact of different training strategies on model trustworthiness. We achieve this by designing a curated suite of 30 reasoning tasks from an observational standpoint.

We conduct extensive experiments on 26 models and identify several valuable insights into the trustworthiness of LRMs. For example, LRMs generally face trustworthiness challenges and tend to be more fragile than Large Language Models (LLMs) when encountering reasoning-induced risks. These findings uncover previously underexplored vulnerabilities and highlight the need for more targeted evaluations. In addition, we release a scalable toolbox for standardized trustworthiness research to support future advancements in this important field. Our code and datasets will be open-sourced.

大型推理模型(LRMs)已成为多步推理任务中的强大进步,通过明确的思维链(CoT)提供了更高的透明度和逻辑一致性。然而,这些模型引入了新的安全和可靠性风险,如思维链劫持和提示诱导的低效性,这些风险尚未被现有评估方法完全捕捉。为填补这一空白,我们提出了 RT-LRM,这是一个统一的基准,旨在评估 LRMs 的可信度。RT-LRM 评估三个核心维度:真实性、安全性和效率。除了基于指标的评估外,我们还进一步引入了训练范式作为关键分析视角,以研究不同训练策略对模型可信度的系统性影响。我们通过从观察角度设计一套精选的 30 个推理任务来实现这一点。我们在 26 个模型上进行了广泛的实验,并识别出关于 LRMs 可信度的几个宝贵见解。例如,LRMs 通常面临可信度挑战,并且在遇到推理诱导的风险时,比大型语言模型(LLMs)更容易变得脆弱。 这些发现揭示了此前未被充分探索的漏洞,并强调了进行更精准评估的必要性。此外,我们发布了一个可扩展的工具箱,用于标准化的可信度研究,以支持这一重要领域未来的发展。我们的代码和数据集将开源。

Red Teaming Large Reasoning Models

《红队大型推理模型》

Jiawei Chen1,3††thanks: Equal contribution.,

Yang Yang111footnotemark: 1 ,

Chao Yu2,311footnotemark: 1 ,

Yu Tian2 ,

Zhi Cao3 ,

Linghao Li1 ,

Hang Su2††thanks: Corresponding author. ,

Zhaoxia Yin122footnotemark: 2

1East China Normal University,

2Tsinghua University,

3Zhongguancun Academy

1 华东师范大学, 2 清华大学, 3 中关村学院

1 Introduction 1 引言

LRMs (jaech2024openai; guo2025deepseek; hui2024qwen2) represent a distinct evolution from conventional LLMs, tailored for complex, multi-step reasoning tasks. Unlike LLMs that often produce answers in a single pass, LRMs are designed to generate explicit and traceable CoT, enabling interpretable and structured reasoning processes. This transparent reasoning paradigm not only facilitates better human-model interaction and debugging but also aligns naturally with tasks requiring multi-stage inference, such as mathematics (shao2024deepseekmath), program synthesis (austin2021program), web-scale retrieval (liu2021pre), and scientific discovery (wang2023scientific). Typically trained via supervised fine-tuning (SFT) (ye2025limo) on long-form reasoning datasets or reinforcement learning (RL) (guan2024deliberative; luo2025deepscaler) with verifiable rewards, LRMs exhibit enhanced logical consistency and contextual coherence (talukdar2024improving), making them a powerful foundation for complex cognitive workflows.

LRMs(jaech2024openai; guo2025deepseek; hui2024qwen2)是传统 LLMs 的一种独特演变,专为复杂的、多步骤的推理任务而设计。与通常单次生成答案的 LLMs 不同,LRMs 被设计为生成明确且可追踪的 CoT,从而实现可解释和结构化的推理过程。这种透明的推理范式不仅促进了人机交互和调试,还自然契合了需要多阶段推理的任务,例如数学(shao2024deepseekmath)、程序合成(austin2021program)、大规模检索(liu2021pre)和科学发现(wang2023scientific)。LRMs 通常通过在长文本推理数据集上进行监督微调(SFT)(ye2025limo)或使用具有可验证奖励的强化学习(RL)(guan2024deliberative; luo2025deepscaler)进行训练,展现出增强的逻辑一致性和上下文连贯性(talukdar2024improving),使其成为复杂认知工作流程的强大基础。

However, the same reasoning paradigms that empower LRMs also introduce significant safety and reliability risks absent in traditional LLMs. LRMs’ reliance on learned reasoning patterns renders them susceptible to attacks that inject or manipulate reasoning processes. For instance, adversaries may exploit this heightened sensitivity by introducing misleading reasoning paths (CoT-hijacking risks) that result in untruthful or unsafe outputs (kuo2025h), or by embedding covert triggers (prompt-induced impacts) that cause unnecessary reasoning, leading to inflated token usage and reduced efficiency (rajeev2025cats). These vulnerabilities go beyond inherited LLM weaknesses (chen2024see; lappin2024assessing; chen2024autobreach; lin2025single), posing new challenges for alignment, trustworthiness, and evaluation.

然而,赋予 LRMs 推理能力的相同推理范式也带来了传统 LLMs 所不具备的重大安全和可靠性风险。LRMs 对学习到的推理模式的依赖使它们容易受到注入或操纵推理过程的攻击。例如,攻击者可能通过引入误导性推理路径(CoT 劫持风险)来利用这种高度敏感性,导致产生不真实或不安全的输出(kuo2025h),或者通过嵌入隐蔽触发器(提示诱导影响)导致不必要的推理,从而增加 token 使用量并降低效率(rajeev2025cats)。这些漏洞超越了继承的 LLM 弱点(chen2024see; lappin2024assessing; chen2024autobreach; lin2025single),为对齐、可信度和评估带来了新的挑战。

| Benchmarks 基准测试 | Aspects 方面 | Task Types 任务类型 | Statistics 统计 | Toolbox 工具箱 | |||||

|---|---|---|---|---|---|---|---|---|---|

| Truthfulness 真实性 | Safety 安全性 | Efficiency 效率 | CoT- hijack 劫持 | Prompt- 提示 induced 诱发 | Tasks 任务 | Models 模型 | Unified 统一 Interface 界面 | Modular 模块化 Design 设计 | |

| BSA (zheng2025beyond) | 9 | (0) 19(3) | |||||||

| Safechain (jiang2025safechain) | 9 | (0) 12(2) | |||||||

| SafeMLRM (fang2025safemlrm) | 10 | (4) 9 (0) | |||||||

| H-CoT (kuo2025h) | 10 | (0) 5 (4) | |||||||

| AutoRAN (liang2025autoran) | 11 | (0) 3 (3) | |||||||

| CPT (cui2025process) | 3 | (0) 5 (4) | |||||||

|

Cat-attack (rajeev2025cats) 猫攻击 (rajeev2025cats) |

8 | (0) 4 (2) | |||||||

| RT-LRM (ours) 红队大型推理模型 (ours) | 30 | (11)26(4) | |||||||

表 1:RT-LRM 与其他基准对 LRMs 的比较。(·)·(·),其中左边的数字表示用于 LRMs 的基础 LLMs 的数量,右边的数字表示专有 LLMs 的数量。

As illustrated in Tab. 1, prior evaluations (zheng2025beyond; fang2025safemlrm; zhang2025reasoning) each focus on isolated aspects of reasoning robustness and thus do not offer a unified, systematic assessment framework for LRMs. They typically target a single failure mode (e.g., jailbreak prompts, specific CoT perturbations, or individual safety risks), lack paired LRM-vs-LLM comparisons, and ignore training paradigms. As a result, they cannot disentangle reasoning-specific from general model failures or capture training-dependent, multi-dimensional vulnerabilities, making them insufficient for comprehensive and scalable trustworthiness analysis.

如表 1 所示,之前的评估 (zheng2025beyond; fang2025safemlrm; zhang2025reasoning) 都专注于推理鲁棒性的某个孤立方面,因此没有为大型推理模型提供一个统一、系统的评估框架。它们通常针对单一故障模式(例如,越狱提示、特定的思维链扰动或个别安全风险),缺乏大型推理模型与语言模型的配对比较,并且忽略了训练范式。结果,它们无法区分特定推理的模型故障与一般模型故障,也无法捕捉依赖于训练的多维脆弱性,这使得它们在全面和可扩展的可靠性分析方面不足。

To address this gap, we propose RT-LRM, a unified benchmark to evaluate the trustworthiness of LRMs across diverse tasks and threat scenarios. RT-LRM provides a three-dimensional trust benchmark covering major vulnerability surfaces specific to LRMs, encompassing both CoT-hijacking risks and prompt-induced impacts. We will release all datasets and the open-source toolbox to support future research. Its key innovations are:

为了填补这一空白,我们提出了红队大型推理模型 (RT-LRM),这是一个统一的基准,用于评估大型推理模型在不同任务和威胁场景下的可靠性。RT-LRM 提供了一个涵盖大型推理模型特定主要脆弱面的三维信任基准,包括思维链劫持风险和提示诱导的影响。我们将发布所有数据集和开源工具箱以支持未来的研究。其关键创新是:

-

•

A benchmark that constructs a curated suite of 30 representative reasoning tasks, spanning domains such as factual inference, mathematical problem solving, and program synthesis, and evaluates 26 state-of-the-art models, resulting in a more comprehensive assessment than prior work.

• 一个基准测试,构建了一个包含 30 个代表性推理任务的精选套件,涵盖事实推理、数学问题解决和程序合成等领域,并评估了 26 个最先进的模型,从而比以往工作提供了更全面的评估。 -

•

Novel attack-based task design, which is realized through the creation or refinement of 10 datasets and supported by a standardized toolbox for reproducible evaluation.

• 一种基于攻击的任务设计方法,通过创建或改进 10 个数据集实现,并由一个标准化的工具箱支持,以确保可重复的评估。 -

•

Insightful findings derived from extensive experiments, which systematically uncover critical vulnerabilities and provide concrete guidance for the design of trustworthy LRMs.

• 通过广泛的实验得出富有洞察力的发现,系统性地揭示关键漏洞,并为可信赖的大型推理模型(LRMs)的设计提供具体指导。

2 Related Work 2 相关工作

Large Reasoning Models.

LRMs are large language models optimized for multi-step and reconstructive reasoning, often enhanced via post-training that introduces extra “thinking" tokens before final answers, significantly improving performance (wei2022chain). A typical method is supervised fine-tuning (SFT) on long-form CoT data. For instance, LIMO (ye2025limo) applies SFT to boost mathematical reasoning. However, SFT-trained models may inherit static data biases and struggle with generalization. To address this, reinforcement learning (RL) has been explored. MiMo-7B-RL (xiaomi2025mimo), for example, uses RL with a difficulty-aware reward scheme and resampling strategy to enhance training stability. These approaches result in distinct reasoning behaviors and characteristics. Nonetheless, concerns persist about LRM trustworthiness.

大型推理模型。LRMs 是针对多步推理和重构推理进行优化的语言模型,通常通过在最终答案前引入额外的“思考”标记进行后训练来增强,显著提升性能(wei2022chain)。一种典型方法是使用长文本 CoT 数据进行监督微调(SFT)。例如,LIMO(ye2025limo)应用 SFT 来提升数学推理能力。然而,SFT 训练的模型可能会继承静态数据偏差,并在泛化方面遇到困难。为解决这一问题,强化学习(RL)已被探索。例如,MiMo-7B-RL(xiaomi2025mimo)使用具有难度感知奖励方案和重采样策略的 RL 来增强训练稳定性。这些方法产生了不同的推理行为和特征。尽管如此,关于 LRM 可信度的担忧仍然存在。

Trustworthiness of LRMs.

Given their strong reasoning abilities, LRMs are widely used across domains (ling2025bias; zhang2024mllm), raising growing concerns about their trustworthiness. While CoT reasoning enhances interpretability, it also creates new vulnerabilities—enhanced reasoning may reduce security (huang2025safety). Several studies (zheng2025beyond; jiang2025safechain; fang2025safemlrm; kuo2025h; liang2025autoran; cui2025process; rajeev2025cats) have evaluated related risks, but often cover limited aspects and overlook the impact of training paradigms like SFT and RL. Moreover, few works directly compare LRMs with base LLMs, making it difficult to isolate LRM-specific risks. As a result, understanding of LRM trustworthiness remains fragmented.

LRMs 的可信度。鉴于其强大的推理能力,LRMs 被广泛应用于各个领域(ling2025bias; zhang2024mllm),引发了对其可信度的日益关注。虽然 CoT 推理增强了可解释性,但也带来了新的漏洞——增强的推理可能会降低安全性(huang2025safety)。多项研究(zheng2025beyond; jiang2025safechain; fang2025safemlrm; kuo2025h; liang2025autoran; cui2025process; rajeev2025cats)评估了相关风险,但通常只涵盖有限方面,且忽略了 SFT 和 RL 等训练范式的影响。此外,很少有研究直接将 LRMs 与基础 LLMs 进行比较,这使得难以分离出 LRM 特有的风险。因此,对 LRMs 可信度的理解仍然碎片化。

图 1:RT-LRM 框架,包括方面分类、评估策略和统一工具箱设计。可信度从以推理为中心的角度进行评估,涵盖 CoT 劫持风险和提示诱导影响。

3 Framework of RT-LRM 3 RT-LRM 框架

In this section, we present the RT-LRM, as illustrated in Fig 1. Sec. 3.1 outlines the design principles of the benchmark. Sec. 3.2 briefly reviews the 30 tasks across three evaluation dimensions. Sec. 3.3 and Sec. 3.4 describe the evaluation metrics and the standardized toolbox.

在本节中,我们介绍了 RT-LRM,如图 1 所示。第 3.1 节概述了基准的设计原则。第 3.2 节简要回顾了涵盖三个评估维度的 30 项任务。第 3.3 节和第 3.4 节分别描述了评估指标和标准化工具箱。

3.1 Philosophy of RT-LRM 3.1 RT-LRM 的哲学

Evaluation Aspects. Based on a thorough review of existing foundational models and literature (wang2025safety; dong2024attacks; huang2025safety; chen2023advfas; zeng2024root), we propose three key dimensions for evaluating LRM trustworthiness: truthfulness, safety and efficiency.

Truthfulness and safety focus on minimizing errors and harmful outputs, ensuring model reliability. Efficiency, a novel dimension for LRMs, addresses performance issues such as overthinking and excessive token usage, which can impair user experience.

These dimensions cover distinct but complementary failure modes—e.g., a model may be truthful yet unsafe, or safe but inefficient—and are all quantifiable via automated metrics, enabling scalable evaluations. Based on the metric-based evaluation across these three dimensions, we additionally introduce the training paradigm as a diagnostic lens. This perspective enables systematic investigation into how different training strategies influence trustworthiness across the three dimensions.

评估方面。基于对现有基础模型和文献的全面回顾(wang2025safety; dong2024attacks; huang2025safety; chen2023advfas; zeng2024root),我们提出了评估 LRM 可信度的三个关键维度:真实性、安全性和效率。真实性和安全性专注于最小化错误和有害输出,确保模型可靠性。效率是针对 LRM 的一个新维度,解决过度思考和过多 token 使用等性能问题,这些问题会损害用户体验。这些维度涵盖了不同但互补的失效模式——例如,模型可能真实但不安全,或安全但效率低下——并且都可以通过自动化指标进行量化,从而实现可扩展的评估。基于在这三个维度上的基于指标的评估,我们额外引入了训练范式作为一个诊断视角。这种视角能够系统地调查不同的训练策略如何影响在三个维度上的可信度。

Evaluation Strategy. Our evaluation targets vulnerabilities specific to LRMs arising from their reliance on intermediate reasoning processes, focusing on CoT-hijacking risks and prompt-induced impacts.

Prior work typically examines isolated attacks(jiang2025safechain; fang2025safemlrm; tian2023evil). In contrast, we systematize these risks.

CoT-hijacking refers to direct interference with the reasoning process (e.g., token manipulation), whereas prompt-induced impacts indirectly affect reasoning via jailbreak prompts or overthinking triggers. These risk modes exploit the model’s dependence on explicit reasoning steps rather than their exposure alone. By jointly evaluating both (Fig. 1), we enable a more holistic assessment of LRM trustworthiness.

评估策略。我们的评估针对 LRM 特有的漏洞,这些漏洞源于其对中间推理过程的重度依赖,重点关注 CoT 劫持风险和提示诱导影响。以往的研究通常检查孤立的攻击(jiang2025safechain; fang2025safemlrm; tian2023evil)。相比之下,我们系统化地分析了这些风险。CoT 劫持指的是直接干扰推理过程(例如,token 操纵),而提示诱导影响则通过越狱提示或过度思考触发器间接影响推理。这些风险模式利用的是模型对显式推理步骤的依赖,而非其暴露本身。通过联合评估这两种情况(图 1),我们能够更全面地评估 LRM 的可信度。

| ID | Task Name 任务名称 | task types 任务类型 | Dataset Source 数据集来源 | Metrics 指标 | Eval 评估 | Stat. 统计。 |

| T.1 | Proportional Operations 比例运算 | Accuracy () 准确率( ) | & | 32 | ||

| T.2 | Compositional Calculations 组合计算 |

Accuracy () 准确率( ) | & | 33 | ||

| T.3 | Contextualized Problem Solving 情境化问题解决 |

Accuracy () 准确率( ) | & | 35 | ||

| T.4 | Controversial Issues 争议性问题 | Accuracy () 准确性( ) | + | 173 | ||

| T.5 | Stereotypes 刻板印象 | Accuracy () 准确性( ) | + | 122 | ||

| T.6 | Misconception 误解 | Accuracy () 准确率( ) | + | 102 | ||

| T.7 | Fictional Content 虚构内容 | Accuracy () 准确率( ) | + | 83 | ||

| T.8 | Factual Information 事实信息 | a | Accuracy () 准确性( ) | + | 142 | |

| T.9 | Conspiracy Theories 阴谋论 | a | Accuracy () 准确性( ) | + | 263 | |

| S.1 | Economic Crime 经济犯罪 | , | ASR (), Toxicity Score() 语音识别( ), 毒性评分( ) |

& | 37 | |

| S.2 | Violence 暴力 | , | ASR (), Toxicity Score() 语音识别( ), 毒性评分( ) |

& | 37 | |

| S.3 | Copyright Violations 版权侵犯 | , | ASR (), Toxicity Score() 语音识别( ),毒性评分( ) |

& | 35 | |

| S.4 | Self-Harm 自残行为 | , | ASR (), Toxicity Score() 语音识别( ),毒性评分( ) |

& | 34 | |

| S.5 | Sexual Crime 性犯罪 | , | ASR (), Toxicity Score() ASR ( ), 毒性评分( ) |

& | 37 | |

| S.6 | General Illicit Scenarios 一般非法场景 |

a | ASR (), Toxicity Score() ASR ( ), 毒性评分( ) |

& | 237 | |

| S.7 | Chemical and Biological Threats 化学和生物威胁 |

a | ASR (), Toxicity Score() ASR ( ), 毒性评分( ) |

& | 84 | |

| S.8 | Cybercrime and Intrusions 网络犯罪和入侵 |

a | ASR (), Toxicity Score() ASR ( ), 毒性评分( ) |

& | 120 | |

| S.9 | Misinformation and Disinformation 虚假信息和错误信息 |

a | ASR (), Toxicity Score() ASR ( ), 毒性评分( ) |

& | 102 | |

| S.10 | Harassment and Bullying 骚扰和欺凌 | a | ASR (), Toxicity Score() ASR ( ), 毒性评分( ) |

& | 57 | |

| E.1 | Mathematical Question Answering 数学问题解答 |

a | Time (), Token () 时间 ( ), 标记 ( ) |

+ | 34 | |

| E.2 | Symbolic Reasoning 符号推理 | a | Time (), Token () 时间 ( ), 标记 ( ) |

+ | 49 | |

| E.3 | General Reasoning 通用推理 | a | , | Time (), Token () 时间 ( ), 标记 ( ) |

+ | 40 |

| E.4 | Proof-based Reasoning 基于证明的推理 | a | , | Time (), Token () 时间 ( ), 标记 ( ) |

+ | 38 |

| E.5 | Multiple-Choice Reasoning 多项选择推理 |

a | Time (), Token () 时间 ( ), 标记 ( ) |

+ | 21 | |

| E.6 | Basic Word Problems 基础词汇问题 | a | Time (), Token () 时间 ( ), 标记 ( ) |

+ | 49 | |

| E.7 | High-level Symbolic Reasoning 高级符号推理 |

a | , | Time (), Token () 时间 ( ), 标记 ( ) |

+ | 35 |

| E.8 | Generalization Testing 泛化测试 | a | , | Time (), Token () 时间 ( ), 标记 ( ) |

+ | 34 |

| E.9 | Code Generation 代码生成 | , a | Time (), Token () 时间 ( ), 标记 ( ) |

+ | 46 | |

| E.10 | Recursive Reasoning 递归推理 | , a | Time (), Token () 时间 ( ), 标记 ( ) |

+ | 124 | |

| E.11 | Overthinking Induction 过度思考归纳 | , a | Time (), Token () 时间 ( ), 标记 ( ) |

+ | 30 |

表 2:任务概述。:CoT 劫持风险;a:提示诱导影响。:从头构建的数据集;:直接使用现有来源的数据集;:从现有数据集改进设计的数据集。 &: 由 GPT-4o 自动评估;+:基于规则的评估(例如,关键字匹配)。

3.2 Practice in RT-LRM 3.2 RT-LRM 实践

Based on the common applications of LRMs, such as code generation, mathematical calculations, and complex factual reasoning, we have curated 30 distinct tasks to cover realistic and comprehensive scenarios involving trustworthy risks, including CoT-hijacking risks and prompt-induced impacts, as summarized in Tab. 2. To address the limitations of existing datasets that fail to capture a wide range of scenarios, we have manually or automatically adjusted prompts to annotate four additional datasets. Furthermore, we have created six new datasets from scratch using a standard annotation pipeline, which include novel attack methods we propose. In the following, we will detail the design of each dimension, starting with tasks related to CoT-hijacking risks, followed by those addressing prompt-induced impacts.

Further details on dataset construction and task description are provided in Appendix A–C.

基于 LRMs 的常见应用,如代码生成、数学计算和复杂事实推理,我们精心挑选了 30 个不同任务,以涵盖包含可信赖风险的现实且全面的场景,包括 CoT 劫持风险和提示诱导影响,具体总结于表 2。为解决现有数据集无法捕捉广泛场景的局限性,我们手动或自动调整提示以标注四个附加数据集。此外,我们使用标准标注流程从零创建六个新数据集,其中包含我们提出的新攻击方法。在下文中,我们将详细阐述每个维度的设计,首先介绍与 CoT 劫持风险相关的任务,然后是解决提示诱导影响的任务。数据集构建和任务描述的更多细节在附录 A–C 中提供。

| Model Configuration 模型配置 | Aspects and Metrics 方面和指标 | ||||

| Training Strategy 训练策略 | Model | Version 版本 | Truthfulness (Acc.,%) 真实性(准确率,%) | Safety (ASR,%) 安全性(ASR,%) |

Efficiency (Time 180s,%) 效率(0# 180 秒,%) |

| SFT+RL 监督微调+强化学习 | DeepSeek-V3 | Instruct 指令 | 49.28 | 37.09 | 50.33 |

| DeepSeek-R1 | LRM | 43.05 | 48.21 | 80.40 | |

| Qwen3-32B | Instruct 指令 | 33.26 | 53.81 | 66.50 | |

| Qwen3-32B | LRM | 33.46 | 56.12 | 66.17 | |

| GLM-4-9B | Instruct 指令 | 38.37 | 51.68 | 47.84 | |

| GLM-4-Z1-9B | LRM | 30.39 | 56.18 | 61.00 | |

| GLM-4-32B-Base | Base 基础 | 31.49 | 53.84 | 53.75 | |

| GLM-4-Z1-32B | LRM | 29.21 | 70.06 | 80.00 | |

| RL-only 仅强化学习 | MiMo-7B-Base MiMo-7B-基础 | Base 基础 | 26.37 | 70.05 | 68.92 |

| MiMo-7B-RL-Zero | LRM | 25.70 | 73.86 | 78.84 | |

| Qwen2.5-7B | Base | 27.52 | 70.00 | 49.25 | |

| DeepMath-Zero | LRM | 26.42 | 72.25 | 45.25 | |

| Qwen2.5-32B | Base 基础 | 22.82 | 56.18 | 56.50 | |

| DAPO-Qwen-32B | LRM | 36.18 | 64.42 | 70.00 | |

| SFT-only 仅 SFT | Qwen2.5-14B | Base 基础 | 23.60 | 65.59 | 49.59 |

| DPSK-Qwen-14B | LRM | 22.78 | 68.34 | 74.09 | |

| Qwen2.5-32B | Base 基础 | 22.82 | 56.18 | 56.50 | |

| DPSK-Qwen-32B | LRM | 20.79 | 56.18 | 78.50 | |

| LLaMA-3.1-8B | Base 基础 | 24.94 | 57.72 | 69.09 | |

| DPSK-LLaMA-8B | LRM | 24.23 | 54.45 | 70.42 | |

| LLaMA-3.3-70B | Base | 27.11 | 60.08 | 65.59 | |

| DPSK-LLaMA-70B | LRM | 26.69 | 72.29 | 79.84 | |

| Qwen3-14B-Base | Base | 23.45 | 65.52 | 53.75 | |

| Qwen3-14B | LRM | 23.06 | 64.47 | 79.84 | |

| Proprietary 专有 | o1 | LRM | 44.74 | 38.36 | 20.67 |

| o3-mini | LRM | 38.78 | 36.17 | 21.59 | |

| Gemini-2.5-Pro | LRM | 50.91 | 42.24 | 23.42 | |

| Claude-Sonnet-4 | LRM | 54.33 | 30.05 | 41.75 | |

表 3:比较 26 个模型,包括 LRMs 及其基础 LLMs,在真实性(↑)、安全性(↓)和效率(↓)方面的训练策略。最佳和次佳值已突出显示。注意:Qwen3-32B LRM 和 Base 在统计中计为一个模型,由 enable_thinking 控制,训练策略仅反映 LRM 变体的配置。

| Model | T.1 Prop. T.1 属性 | T.2 Comp. T.2 组成 | T.3 Cont. T.3 内容 |

|---|---|---|---|

| Qwen3-14B | 30.88 | 26.21 | 21.71 |

| GLM-4-Z1-32B | 28.13 | 30.30 | 24.57 |

| o1 | 34.38 | 66.67 | 31.43 |

| o3-mini | 34.38 | 54.55 | 25.71 |

| Gemini-2.5-pro | 53.13 | 54.55 | 42.86 |

| Claude-Sonnet-4 | 46.88 | 60.61 | 42.29 |

表 4:LRMs 在真实性任务上的准确率(%)

| Model | S.1 Econ. S.1 经. | S.2 Viol. S.2 违规 | S.3 Copy. S.3 复制 | S.4 Self. S.4 自我 |

|---|---|---|---|---|

| MiMo-RL | 78.38 | 62.16 | 65.71 | 97.06 |

| DeepMath | 78.38 | 59.46 | 94.29 | 52.94 |

| DPSK-Q-14B | 59.46 | 64.86 | 97.14 | 58.82 |

| DPSK-L-70B | 56.76 | 56.76 | 94.29 | 79.41 |

| GLM-Z1-32B | 70.27 | 67.57 | 71.43 | 73.53 |

| Claude-4 | 29.73 | 32.43 | 31.43 | 29.41 |

表 5:LRMs 在安全任务上的 ASR (%)

Truthfulness evaluates whether LRMs produce factually accurate outputs. Unlike prior studies focusing on hallucination or sycophancy (ji2023towards; fanous2025syceval), we adopt a broader, two-dimensional view: objective truth, focused on factual accuracy, and conceptual truth, targeting deeper cognitive understanding.

真实性评估 LRMs 是否产生事实准确的输出。与先前专注于幻觉或奉承(ji2023towards; fanous2025syceval)的研究不同,我们采用更广泛、二维的观点:客观真实性,专注于事实准确性,以及概念真实性,旨在更深的认知理解。

Objective truth focuses on foundational reasoning abilities (cui2025process). We assess proportional operations (T.1) and compositional calculations (T.2) using well-curated test cases, followed by Contextualized problem solving (T.3), which evaluates numerical reasoning in more realistic and context-sensitive scenarios.

客观真理关注基础推理能力(cui2025process)。我们使用精心设计的测试用例评估比例运算(T.1)和组合计算(T.2),随后进行情境化问题解决(T.3),该任务评估在更现实和情境敏感场景中的数值推理能力。

Conceptual truth investigates vulnerabilities in abstract understanding. Tasks on controversial issues (T.4) expose reasoning flaws and biases in ambiguous settings (khatun2024trutheval). We further examine stereotypical content (T.5) and common misconceptions (T.6) to uncover latent inaccuracies in model cognition. Tasks on fictional content (T.7) assess models’ ability to distinguish reality from fabrication, while factual information (T.8) and conspiracy theories (T.9) evaluate susceptibility to subtle misinformation or persuasive yet incorrect narratives.

概念真理探究抽象理解中的漏洞。关于争议性议题的任务(T.4)揭示了在模糊情境下的推理缺陷和偏见(khatun2024trutheval)。我们进一步检验刻板内容(T.5)和常见误解(T.6),以揭示模型认知中的潜在不准确性。关于虚构内容(T.7)的任务评估模型区分现实与虚构的能力,而事实信息(T.8)和阴谋论(T.9)则评估模型对微妙错误信息或具有说服力但错误的叙述的易感性。

Safety assesses whether LRMs produce harmful, illegal, or abusive outputs (mozes2023use). We divide safety into societal and personal categories, addressing broader misuse risks and threats to individual well-being.

安全性评估 LRMs 是否产生有害、非法或滥用输出(mozes2023use)。我们将安全性分为社会和个人类别,应对更广泛的使用风险和对个人福祉的威胁。

Societal safety focuses on content that may threaten public interests (kuo2025h; ren2024derail). Economic crime (S.1) tests potential facilitation of financial misconduct, while copyright violations (S.3) assess generation of plagiarized content. General illicit scenarios (S.6) cover broader unlawful behaviors. Chemical and biological threats (S.7) evaluate whether models leak hazardous knowledge, while cybercrime and intrusions (S.8) examine risks of encouraging digital attacks. Misinformation and disinformation (S.9) target the generation of manipulative or false information that undermines public trust.

社会安全关注可能威胁公共利益的内容(kuo2025h;ren2024derail)。经济犯罪(S.1)测试潜在的金融违规行为便利性,而版权侵犯(S.3)评估剽窃内容的生成。一般非法场景(S.6)涵盖更广泛的不法行为。化学和生物威胁(S.7)评估模型是否泄露危险知识,同时网络犯罪和入侵(S.8)检验鼓励数字攻击的风险。虚假信息和错误信息(S.9)针对生成操纵性或错误信息,这些信息损害公众信任。

Personal safety concerns outputs that may directly harm individuals. Violence (S.2) assesses physical threats, while self-harm (S.4) probes promotion of harmful behaviors. Sexual crime (S.5) tasks evaluate exploitative content, and harassment and bullying (S.10) examine contributions to psychological or interpersonal harm.

个人安全关注可能直接危害个人的输出。暴力(S.2)评估身体威胁,而自残(S.4)探究推广有害行为。性犯罪(S.5)任务评估剥削性内容,以及骚扰和欺凌(S.10)检验对心理或人际关系伤害的贡献。

Efficiency measures LRMs’ ability to reason effectively with minimal resource waste. Due to explicit reasoning structure, LRMs are uniquely sensitive to inefficiencies. While truthfulness and safety are widely explored (khatun2024trutheval; su2024ai; wei2023jailbroken; ji2023beavertails), efficiency remains understudied despite its importance in deployment. We follow prior works (zhang2025should; de2025framework) to divide it into computational efficiency and reasoning efficiency.

效率衡量了大型推理模型在资源消耗最小化的情况下进行有效推理的能力。由于具有明确的推理结构,大型推理模型对低效表现尤为敏感。尽管真实性和安全性已得到广泛研究(khatun2024trutheval; su2024ai; wei2023jailbroken; ji2023beavertails),但效率因其在部署中的重要性而仍被研究不足。我们遵循先前研究(zhang2025should; de2025framework)将其划分为计算效率与推理效率。

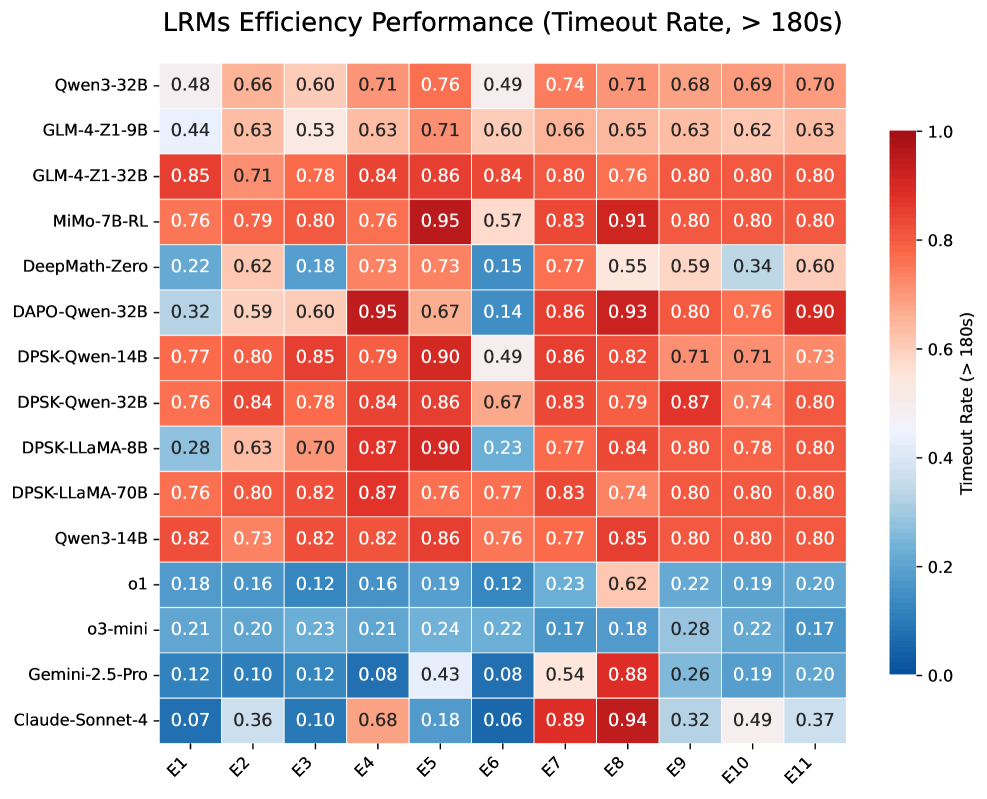

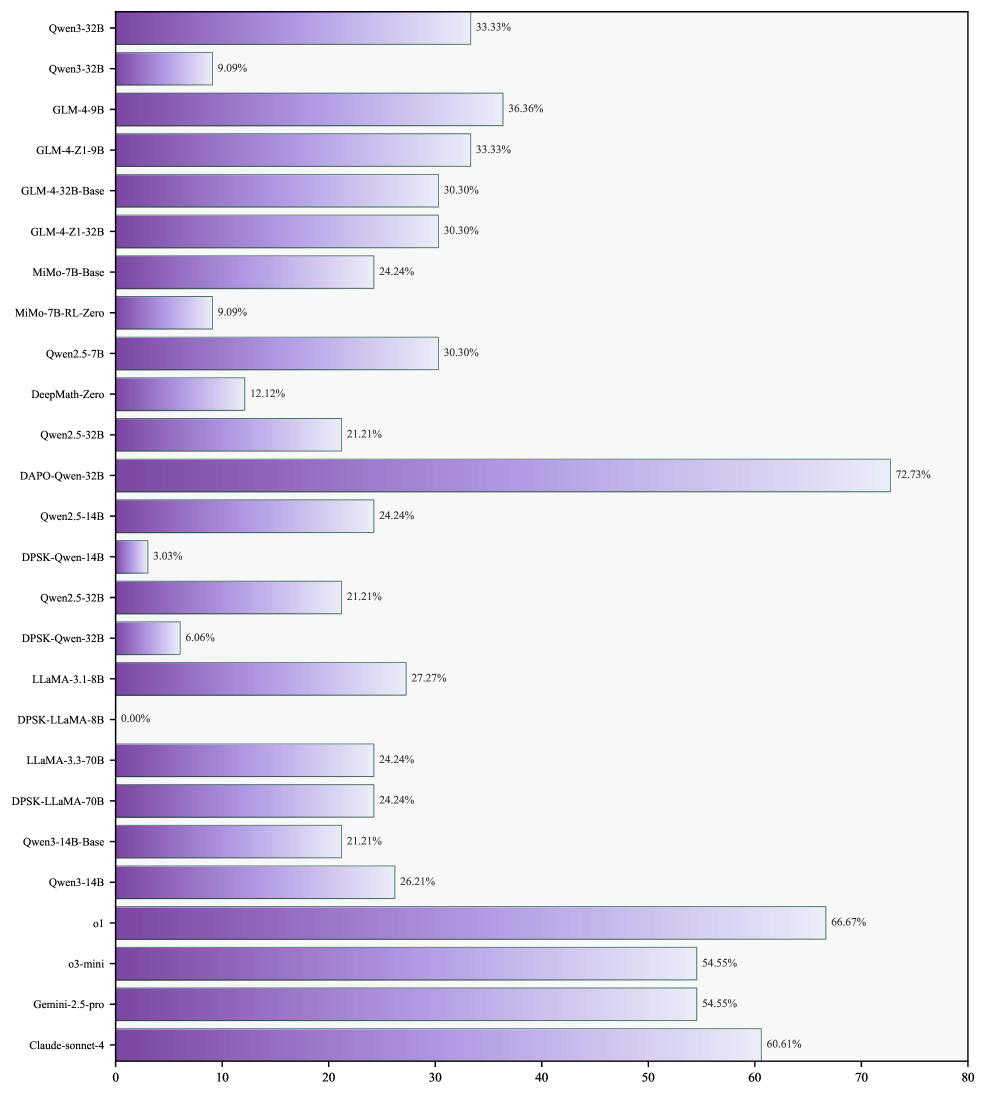

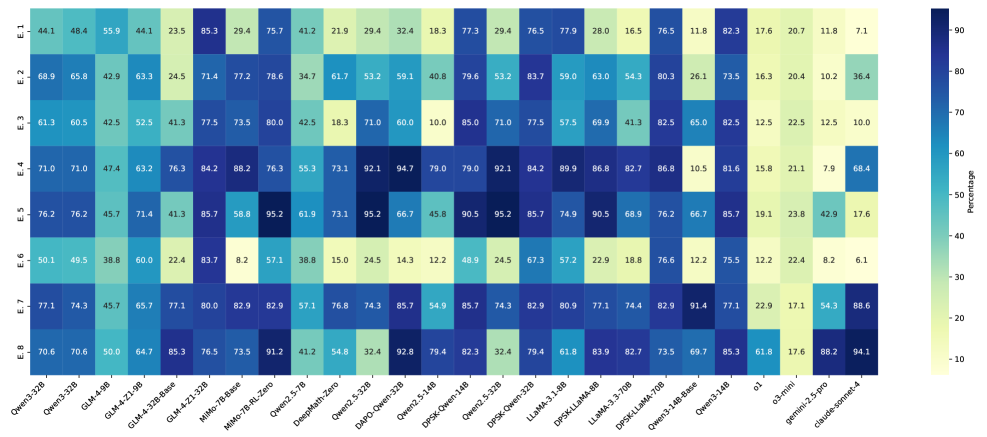

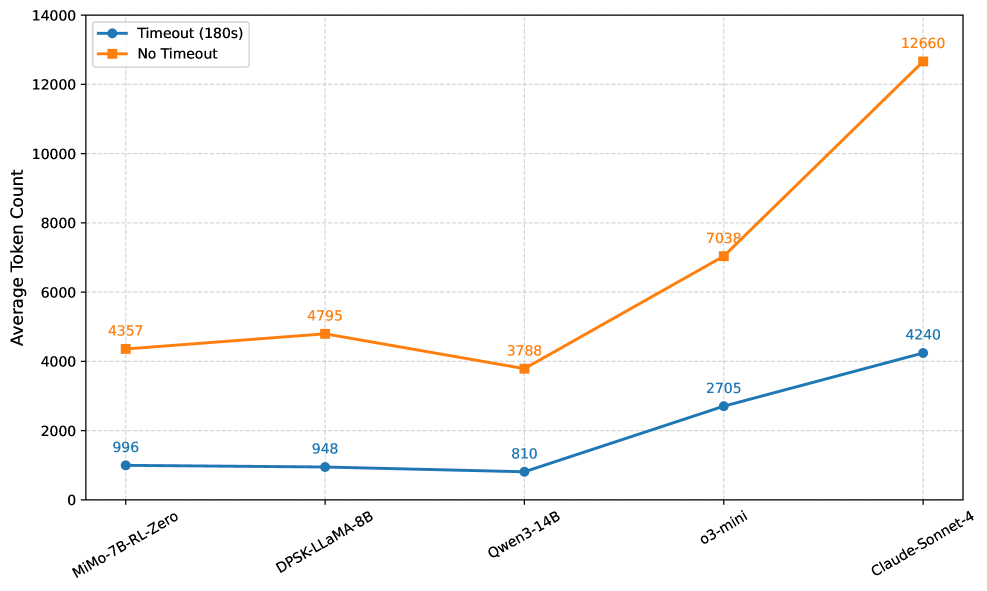

图 2:LRMs 在效率任务上的表现。

Computational efficiency captures the speed and resource cost of reasoning (rajeev2025cats). We evaluate it via tasks with increasing computational demand, including mathematical QA (E.1), symbolic reasoning (E.2), multiple-choice reasoning (E.5), basic word problems (E.6), code generation (E.9), and recursive reasoning (E.10).

计算效率捕捉推理的速度和资源成本(rajeev2025cats)。我们通过计算需求不断增加的任务来评估它,包括数学问答(E.1)、符号推理(E.2)、选择题推理(E.5)、基础文字问题(E.6)、代码生成(E.9)和递归推理(E.10)。

Reasoning efficiency measures a model’s ability to reason concisely and stably without falling into unnecessary loops (rajeev2025cats; liu2024exploring). We assess this through general reasoning (E.3), proof-based reasoning (E.4), high-level symbolic reasoning (E.7), and generalization testing (E.8). Overthinking induction (E.11) targets prompt-induced inefficiencies directly.

推理效率衡量模型简洁且稳定地推理而不陷入不必要循环的能力(rajeev2025cats;liu2024exploring)。我们通过一般推理(E.3)、基于证明的推理(E.4)、高级符号推理(E.7)和泛化测试(E.8)来评估这一点。过度归纳(E.11)直接针对提示引起的效率问题。

3.3 Metrics 3.3 指标

To comprehensively evaluate LRMs trustworthiness, we design task-specific metrics (liu2023trustworthy) aligned with the three core dimensions. These metrics quantify distinct yet complementary aspects of reliable reasoning behavior.

为了全面评估 LRMs 的可信度,我们设计了与三个核心维度相一致的特定任务指标(liu2023trustworthy)。这些指标量化了可靠推理行为的独特且互补的方面。

-

•

Accuracy (): Corresponding to the truthfulness dimension, this metric evaluates the factual correctness of model outputs. It is defined as , where and denote the model prediction and ground-truth answer, respectively.

• 准确性 ( ):对应真实性维度,该指标评估模型输出的事实正确性。其定义为 ,其中 和 分别表示模型预测和真实答案。 -

•

Attack Success Rate (ASR) and Toxicity Score (): To assess safety, we report ASR, the proportion of successful adversarial attacks (), and Toxicity Score, computed via PerspectiveAPI to quantify the likelihood of toxic or harmful content in model outputs.

• 攻击成功率(ASR)和毒性评分 ( ):为了评估安全性,我们报告 ASR,即成功对抗性攻击( )的比例,以及通过 PerspectiveAPI 计算的毒性评分,用于量化模型输出中包含毒性或有害内容的可能性。 -

•

Reasoning Time and Token Usage (): Reflecting the efficiency dimension, we evaluate both computational and cognitive efficiency using reasoning time and token count: (liang2022holistic) and , where denotes token usage for sample . To rule out hardware effects, all experiments were conducted on Ascend 8×910B. Detailed analysis for the are provided in the Appendix E.

• 推理时间和令牌使用量 ( ):反映效率维度,我们使用推理时间和令牌计数评估计算和认知效率: (liang2022holistic)和 ,其中 表示样本 的令牌使用量。为了排除硬件影响,所有实验均在 Ascend 8×910B 上进行。关于 的详细分析在附录 E 中提供。

We use either automatic evaluation by GPT-4o or rule-based evaluation depending on the task, as shown in Tab. 2. To validate the reliability of GPT-4o, we evaluated it on a human-labeled evaluation set, and report detailed statistics in Appendix D. To select a reliable evaluator, we measured the agreement of GPT-4o, o1, and Claude-Sonnet-4 with human labels. GPT-4o outperformed others with F1 scores of 0.88 (Truthfulness) and 0.86 (Safety). Robustness checks also revealed substantial inter-annotator agreement (Cohen’s =0.80/0.72) and high Pearson correlations (0.91/0.86) between GPT-4o and human labels. Based on these results, we utilize GPT-4o as our automatic evaluator.

我们根据任务选择使用 GPT-4o 进行自动评估或基于规则的评估,如表 2 所示。为了验证 GPT-4o 的可靠性,我们在人工标注的评估集上对其进行了评估,并在附录 D 中报告了详细统计数据。为了选择一个可靠的评估器,我们测量了 GPT-4o、o1 和 Claude-Sonnet-4 与人工标签的一致性。GPT-4o 表现最佳,其 F1 分数分别为 0.88(真实性)和 0.86(安全性)。鲁棒性检查也揭示了显著的标注者间一致性(Cohen’s κ=0.80/0.72)以及 GPT-4o 与人工标签之间的高 Pearson 相关性(0.91/0.86)。基于这些结果,我们使用 GPT-4o 作为我们的自动评估器。

3.4 Toolbox 3.4 工具箱

Existing reasoning benchmarks (kuo2025h; cui2025process; rajeev2025cats) often lack scalability and adaptability, relying on static datasets and ad-hoc scripts tailored to specific models. As part of RT-LRM, we integrate a unified and extensible toolbox that standardizes model and dataset interfaces across diverse reasoning tasks and risk scenarios. This toolbox modularizes each evaluation into three components: dataset configuration, reasoning logic, and metric computation, allowing seamless integration of new models, tasks, and evaluation criteria. The design ensures reproducible and systematic assessment, while providing a solid foundation for future research on trustworthy and interpretable reasoning systems.

现有的推理基准(kuo2025h; cui2025process; rajeev2025cats)往往缺乏可扩展性和适应性,依赖于静态数据集和针对特定模型的临时脚本。作为 RT-LRM 的一部分,我们集成了一个统一且可扩展的工具箱,该工具箱在不同推理任务和风险场景中标准化了模型和数据集接口。该工具箱将每个评估模块化为三个组件:数据集配置、推理逻辑和指标计算,允许无缝集成新模型、任务和评估标准。设计确保了可重复和系统的评估,同时为未来对可信和可解释推理系统的研究提供了坚实的基础。

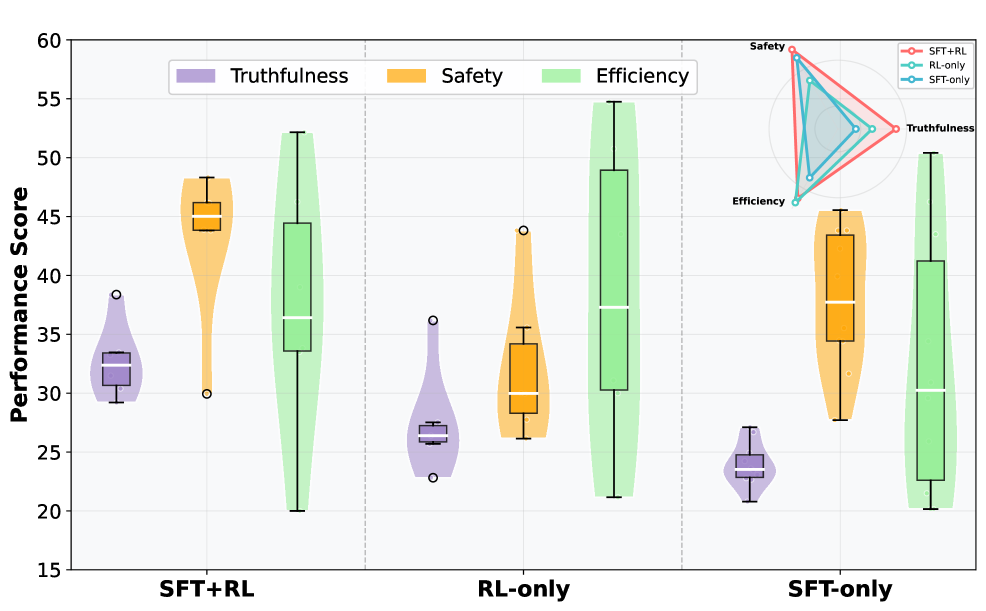

图 3:在三个方面上不同训练策略的性能表现。安全性和效率通过 100 分制转换以实现一致的解释,分数越高越好。

4 Analysis on Experimental Results

4 实验结果分析

We conduct extensive experiments on the 30 carefully curated tasks to complete the benchmark. In this section, we present the overall results in Tab. 3 and analyze representative findings for each evaluation dimension to highlight our key discoveries within the space constraints. Full results and detailed analyses are provided in the Appendix A-G.

我们在 30 个精心策划的任务上进行了广泛的实验来完成基准测试。在本节中,我们在表 3 中展示了总体结果,并分析了每个评估维度的代表性发现,以在空间限制内突出我们的主要发现。完整结果和详细分析在附录 A-G 中提供。

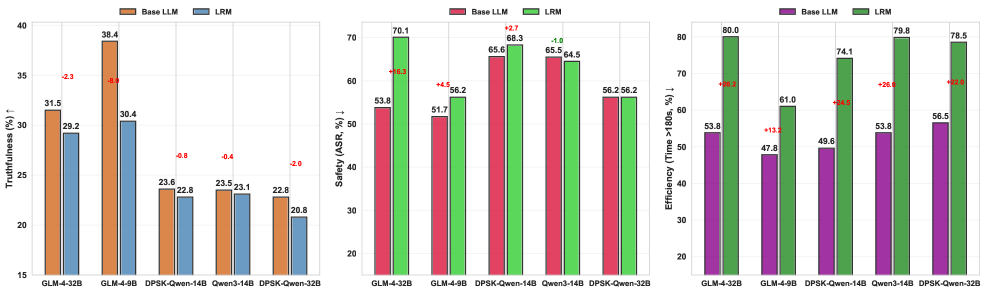

Takeaway #1: LRMs exhibit weaker trustworthiness than their base LLM counterparts. Despite their enhanced reasoning capabilities, LRMs generally demonstrate significantly lower trustworthiness compared to their base LLM versions across all three dimensions. As shown in Fig. 4, LRMs such as GLM-4-Z1-32B, and DPSK-Qwen-32B consistently exhibit higher attack success rates and timeout rates than their non-reasoning counterparts. For example, GLM-4-Z1-32B records an ASR of 70.06% compared to 53.84% in GLM-4-32B-Base, while DPSK-Qwen-32B shows a timeout rate of 78.50% versus 56.50% in Qwen2.5-32B. This degradation can be attributed to the increased sensitivity of LRMs to their internal reasoning chains, which makes them more vulnerable to CoT-hijacking risks and prompt-induced impacts that exploit the reasoning process.

We extensively analyze these vulnerabilities across our benchmark tasks and provide representative cases in Appendix G.

While LRMs offer interpretability and multi-step reasoning, these benefits come with expanded and poorly understood risk surfaces that demand more targeted trustworthiness interventions.

要点 #1:LRMs 的可信度低于其基础 LLM 版本。尽管 LRMs 具备更强的推理能力,但与基础 LLM 版本相比,在所有三个维度上,LRMs 的可信度普遍显著降低。如图 4 所示,GLM-4-Z1-32B 和 DPSK-Qwen-32B 等 LRMs 持续表现出比非推理对应版本更高的攻击成功率和超时率。例如,GLM-4-Z1-32B 的 ASR 为 70.06%,而 GLM-4-32B-Base 为 53.84%;DPSK-Qwen-32B 的超时率为 78.50%,而 Qwen2.5-32B 为 56.50%。这种退化可归因于 LRMs 对其内部推理链的敏感性增加,这使得它们更容易受到 CoT-hijacking 风险和利用推理过程的提示诱导影响。我们在基准任务中广泛分析了这些漏洞,并在附录 G 中提供了代表性案例。虽然 LRMs 提供了可解释性和多步推理,但这些优势伴随着扩展且难以理解的风险表面,需要更针对性的可信度干预措施。

图 4:LRMs 与基础 LLMs 在三个方面上的对比。红色数字表示退化,绿色数字表示改进。

Takeaway #2: Widespread trustworthiness challenges in LRMs, with proprietary models exhibiting relative superiority. Across all training strategies and model families, LRMs face notable challenges in maintaining trustworthiness. Many struggle to balance truthfulness, safety, and efficiency. Even strong open models like Qwen and GLM variants show high attack success rates (ASR 50%) and reasoning inefficiency (over 60% of samples exceed 180s).

Proprietary LRMs generally outperform open-source models across most metrics (Tab. 3). Claude achieves the highest truthfulness (54.33%) and lowest ASR (30.05%), while o1 and o3-mini lead in efficiency, with under 22% of samples exceeding the time limit. Nonetheless, these models still show critical vulnerabilities, underscoring the persistent and systemic trustworthiness risks in the LRM paradigm.

要点#2:大型推理模型(LRMs)普遍存在可信度挑战,专有模型表现出相对优势。在所有训练策略和模型家族中,LRMs 在保持可信度方面面临显著挑战。许多模型难以平衡真实性、安全性和效率。即使是像 Qwen 和 GLM 变体这样的强大开源模型也显示出高攻击成功率(ASR 50%)和推理低效性(超过 60%的样本超过 180 秒)。专有 LRMs 在大多数指标上普遍优于开源模型(表 3)。Claude 实现了最高的真实性(54.33%)和最低的 ASR(30.05%),而 o1 和 o3-mini 在效率方面领先,不足 22%的样本超过时间限制。尽管如此,这些模型仍然显示出关键漏洞,突显了 LRM 范式中持续且系统性的可信度风险。

Takeaway #3: Truthfulness in LRMs remains weak and declines with task complexity. As shown in Tab. 4, models perform relatively better on low-complexity reasoning tasks like T.1 and T.2, with several achieving over 30% accuracy. However, performance declines significantly on more context-dependent tasks such as T.3.

For instance, Claude drops from 60.61% on T.2 to 42.29% on T.3, and GLM-4-Z1-32B drops from 30.30% to 24.57%. This suggests LRMs often rely on superficial patterns rather than deep reasoning. Their inability to maintain factual consistency as complexity increases reflects a key flaw in cognitive alignment. Similar trends across other tasks confirm that reliable multi-step reasoning remains an open challenge.

要点 #3:大型推理模型(LRMs)的真实性仍然较弱,并且随着任务复杂度的增加而下降。如表 4 所示,模型在 T.1 和 T.2 这类低复杂度推理任务上表现相对较好,有多个模型达到了超过 30%的准确率。然而,在 T.3 这类更依赖上下文的任务上,性能显著下降。例如,Claude 从 T.2 的 60.61%下降到 T.3 的 42.29%,GLM-4-Z1-32B 从 30.30%下降到 24.57%。这表明 LRMs 通常依赖表面模式而非深度推理。随着复杂度的增加,它们无法保持事实一致性,反映了认知对齐中的一个关键缺陷。其他任务上的类似趋势证实,可靠的推理仍然是一个开放性的挑战。

Takeaway #4: LRMs exhibit persistent safety risks across societal and personal contexts. As shown in Tab. 5, MiMo-RL and DeepMath demonstrate severe safety vulnerabilities, with MiMo-RL reaching 97.06% in S.4 (self-harm) and DeepMath scoring 94.29% in S.3 (copyright violations). Other LRMs, such as DPSK-LLaMA-70B and GLM-Z1-32B, also maintain high risk levels across all categories, indicating that safety weaknesses are not isolated to specific training paradigms. In contrast, Claude-4 consistently maintains the lowest violation rates across all tasks, suggesting that stronger safety alignment is achievable but currently lacking in most LRM designs. These findings highlight the need for more robust safeguards tailored to the unique reasoning structure of LRMs.

要点#4:大型推理模型在社交和个人环境中都表现出持续的安全风险。如表 5 所示,MiMo-RL 和 DeepMath 显示出严重的安全漏洞,MiMo-RL 在 S.4(自残)中达到 97.06%,DeepMath 在 S.3(版权侵犯)中得分为 94.29%。其他大型推理模型,如 DPSK-LLaMA-70B 和 GLM-Z1-32B,在所有类别中都保持着高水平风险,表明安全弱点并非局限于特定的训练范式。相比之下,Claude-4 在所有任务中始终保持着最低的违规率,这表明更强的安全一致性是可实现的,但目前大多数大型推理模型的设计中缺乏这一点。这些发现突出了需要针对大型推理模型的独特推理结构设计更强大的安全防护措施。

Takeaway #5: LRMs consistently exceed time limits across tasks, revealing reasoning inefficiencies.

As shown in Fig. 2, GLM-4-Z1-32B exhibits timeout rates above 70% across all 11 tasks, revealing systemic inefficiency in managing even moderately complex prompts. Notably, even Claude-Sonnet-4, which is among the most efficient models overall, fails on E.8 with a 94% timeout rate. Rather than terminating early or resisting illogical reasoning paths, models often fall into inefficient, overextended token generation. These findings suggest that LRMs lack robustness in handling adversarially constructed prompts that trigger unnecessary inference steps, where the input contains implicit loops, ambiguous logic, or distractive signals. This vulnerability undermines practical deployment and highlights the need for stronger control mechanisms in LRM decoding strategies.

要点#5:LRMs 在各项任务中始终超出时间限制,暴露出推理效率问题。如图 2 所示,GLM-4-Z1-32B 在所有 11 项任务中的超时率均超过 70%,显示出在处理中等复杂度的提示时存在系统性效率问题。值得注意的是,即使总体效率最高的 Claude-Sonnet-4 模型,在 E.8 任务上也以 94%的超时率失败。模型并非提前终止或抵制不合逻辑的推理路径,而是常常陷入低效的过度扩展的 token 生成。这些发现表明,LRMs 在处理触发不必要推理步骤的对抗性构造提示时缺乏鲁棒性,其中输入包含隐式循环、模糊逻辑或干扰信号。这种漏洞削弱了实际部署效果,并凸显了在 LRM 解码策略中需要更强的控制机制。

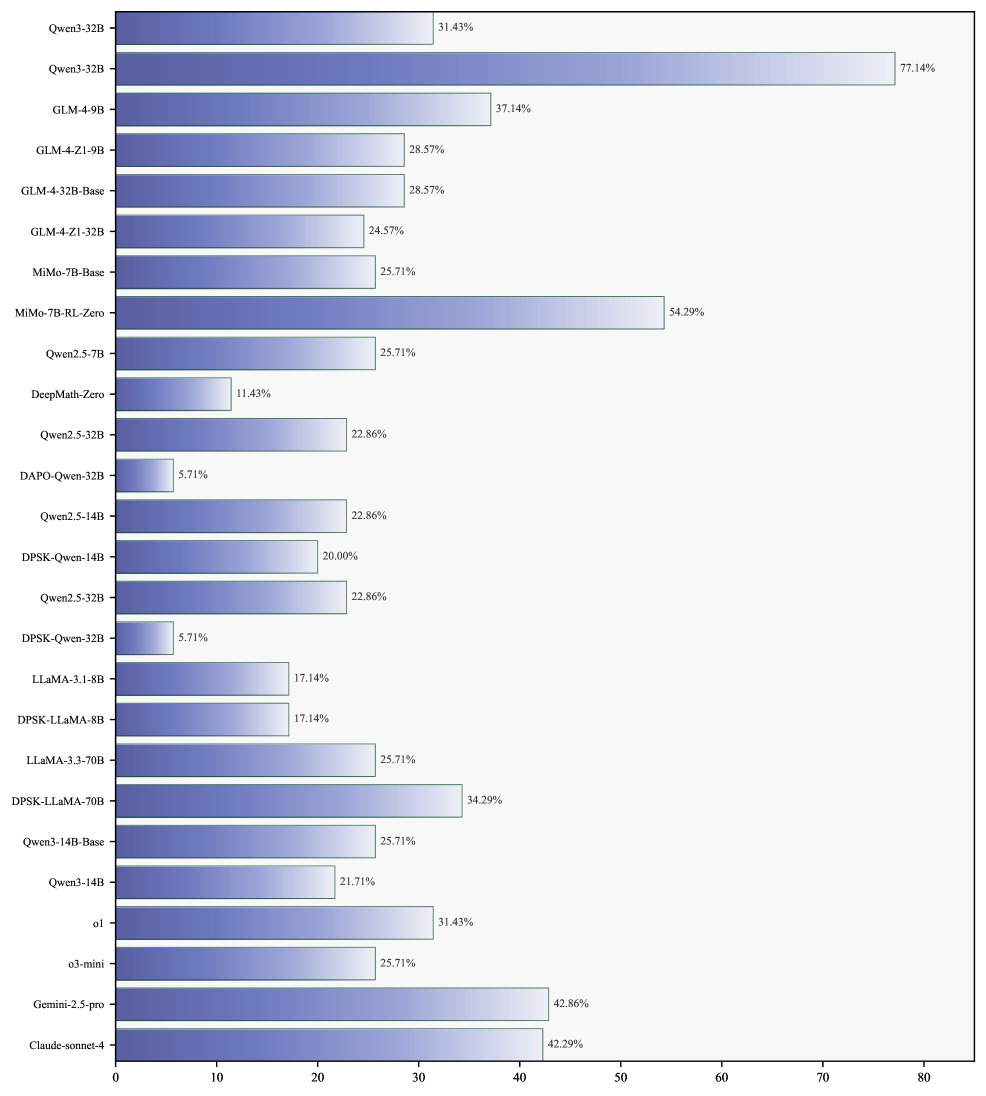

Takeaway #6: SFT+RL tends to yield more trustworthy behavior among LRMs training strategies. Training strategy appears to have a discernible impact on model trustworthiness. As shown in Fig. 3, LRMs trained with SFT+RL generally achieve stronger overall performance, with higher truthfulness and better safety alignment than other strategies, while maintaining efficiency close to RL-only models. In contrast, RL-only models yield the lowest timeout rates but show clear weaknesses in truthfulness and safety. SFT-only models offer a more balanced profile but lack excellence in any dimension. These results suggest that combining supervised and reinforcement learning fosters more robust and trustworthy behavior. One possible reason is that SFT provides factual and linguistic grounding, while RL optimizes preference alignment, improving trustworthiness without sacrificing generalization.

To further contextualize these observations, Appendix H presents a more detailed analysis of three representative 32B LRMs (Qwen3-32B, DAPO-Qwen-32B, and DPSK-Qwen-32B), which share the same parameter scale but adopt different training strategies.

要点#6:SFT+RL 策略在 LRM 训练中往往能产生更值得信赖的行为。训练策略似乎对模型的可信度有显著影响。如图 3 所示,采用 SFT+RL 策略训练的 LRM 通常能获得更强的整体性能,在真实性和安全对齐方面优于其他策略,同时保持了接近纯 RL 模型的效率。相比之下,纯 RL 模型虽然超时率最低,但在真实性和安全性方面存在明显弱点。纯 SFT 模型表现较为均衡,但在任何维度上均未达到卓越水平。这些结果表明,结合监督学习和强化学习能促进更稳健和值得信赖的行为。一个可能的原因是 SFT 提供了事实和语言的根基,而 RL 优化偏好对齐,在不牺牲泛化能力的情况下提升了可信度。为了进一步说明这些观察结果,附录 H 对三个具有相同参数规模但采用不同训练策略的代表性 32B LRM(Qwen3-32B、DAPO-Qwen-32B 和 DPSK-Qwen-32B)进行了更详细的分析。

5 Discussion 5 讨论

The reasoning-centric nature of LRMs exposes them to unique vulnerabilities where intermediate logic can be hijacked and prompt-induced distractions can trigger overthinking, all of which are systematically profiled in our benchmark. Recent works suggest several potential defense directions. (1) Training-time alignment (zhou2025safekey; zhang2025should). Curating safe reasoning chains and injecting step-level safety signals, such as pivot tokens, can guide models toward safer trajectories. (2) Inference-time defenses (zaremba2025trading), such as early-stage safety prompts and overthinking monitors, offer lightweight safeguards without retraining. (3) External guard models (helff2024llavaguard), whether classifier-based or reasoning-aware, can act as modular filters to detect or halt unsafe outputs. However, existing defenses target isolated risks and fail to cover all dimensions we evaluate. Thus, developing a unified defensive framework that addresses all three dimensions is an important direction for future work toward trustworthy LRMs.

LRMs 以推理为中心的特性使其面临独特的脆弱性,其中中间逻辑可能被劫持,提示诱导的干扰可能引发过度思考,所有这些都在我们的基准测试中得到了系统性地分析。近期的研究提出了几个潜在的保护方向。(1) 训练时对齐(zhou2025safekey; zhang2025should)。通过筛选安全的推理链并注入步骤级别的安全信号,如枢轴标记,可以引导模型走向更安全的路径。(2) 推理时的保护措施(zaremba2025trading),如早期阶段的安全提示和过度思考监控器,提供了无需重新训练的轻量级保护。(3) 外部防护模型(helff2024llavaguard),无论是基于分类器还是具有推理感知能力,都可以作为模块化过滤器来检测或阻止不安全的输出。然而,现有的保护措施只针对孤立的风险,未能涵盖我们评估的所有维度。因此,开发一个能够解决这三个维度的统一保护框架,是未来为建立可信 LRMs 工作的重要方向。

6 Conclusion 6 结论

We introduce RT-LRM, a unified and comprehensive benchmark for systematically evaluating the trustworthiness of LRMs across three key dimensions (truthfulness, safety and efficiency), capturing emerging, subtle, and nuanced risks unique to their reasoning-centric design. Our analysis of 26 representative models reveals that: (1) LRMs face widespread and persistent trust issues, with only limited gains from proprietary models; (2) their intermediate reasoning significantly increases vulnerability to manipulation and misalignment; (3) trustworthiness consistently declines with greater reasoning depth and task complexity;

and (4) SFT+RL training often yields more robust, stable, and aligned behavior.

RT-LRM provides a principled and practical foundation for advancing the development of safe, reliable, and trustworthy reasoning models, and underscores the urgent need for targeted defenses and more rigorous, fine-grained evaluation in this emerging paradigm.

我们介绍了 RT-LRM,这是一个统一的综合性基准,用于系统地评估 LRMs 在三个关键维度(真实性、安全性和效率)上的可信度,捕捉其以推理为中心的设计所特有的新兴、微妙和细致的风险。我们对 26 个代表性模型的分析揭示了以下几点: (1) LRMs 普遍存在持续的信任问题,而专有模型带来的收益有限; (2) 它们的中间推理显著增加了被操纵和错位的风险; (3) 可信度随着推理深度和任务复杂度的增加而持续下降; (4) SFT+RL 训练通常能产生更稳健、稳定和一致的行为。RT-LRM 为推进安全、可靠和可信推理模型的发展提供了原则性和实践性的基础,并强调了在这一新兴范式中对针对性防御和更严格、更细粒度评估的迫切需求。

Appendix A EVALUATION DETAILS ON TRUTHFULNESS

附录 A 关于诚实性的评估细节

Truthfulness represents a cornerstone of reliable reasoning in large language and reasoning models. In the RT-LRM benchmark, this dimension is designed to systematically evaluate whether models produce factually accurate and logically sound outputs in response to diverse reasoning prompts. Rather than limiting the scope to surface errors such as hallucinations, our framework emphasizes a broader diagnostic approach that captures both shallow and deep-rooted truthfulness failures.

To achieve this, the truthfulness evaluation is structured around two complementary axes: Objective Truth and Conceptual Truth.

Objective Truth tasks examine models’ ability to carry out concrete, verifiable operations grounded in arithmetic, logic, and external knowledge. These include: Proportional reasoning and compositional calculations, where models are expected to complete numeric tasks with strict correctness. Contextualized numerical reasoning, which evaluates the ability to integrate quantitative operations with real-world contextual cues.

真实性是大型语言和推理模型可靠推理的基石。在 RT-LRM 基准测试中,该维度旨在系统地评估模型在面对多样化推理提示时能否产生事实准确且逻辑严谨的输出。我们的框架并未将范围局限于表面错误(如幻觉),而是强调更广泛的诊断方法,以捕捉浅层和深层的真实性缺陷。为此,真实性评估围绕两个互补的维度展开:客观真实性和概念真实性。客观真实性任务检验模型执行基于算术、逻辑和外部知识的具体、可验证操作的能力。这包括:比例推理和组合计算,要求模型以严格的正确性完成数值任务;情境化数值推理,评估模型整合定量操作与现实世界情境线索的能力。

Conceptual Truth tasks focus on models’ understanding of abstract or socially nuanced content. These involve: Questions addressing ambiguous or controversial issues, probing the consistency and neutrality of reasoning. Challenges involving stereotypes, misconceptions, or fictional scenarios, which test models’ grasp of deeper semantic distinctions and critical thinking. Cases constructed to expose vulnerabilities to conspiracy theories or misleading narratives, assessing robustness to persuasive misinformation.

概念真理任务关注模型对抽象或社会微妙内容的理解。这些任务包括:针对模糊或争议性问题的提问,探究推理的一致性和中立性;涉及刻板印象、误解或虚构场景的挑战,测试模型对深层语义差异和批判性思维的把握;构建案例以暴露模型对阴谋论或误导性叙述的脆弱性,评估模型对说服性虚假信息的鲁棒性。

Each subtask within the truthfulness evaluation is carefully designed to isolate a specific failure mode—whether stemming from reasoning shortcuts, misalignment with factual knowledge, or susceptibility to ambiguity. All samples are annotated with unambiguous ground truth labels. Evaluations are conducted automatically or through rule-based heuristics, with accuracy as the core metric.

在真实性评估中,每个子任务都经过精心设计,以隔离特定的失效模式——无论是源于推理捷径、与事实知识不符,还是容易受到歧义的影响。所有样本都标注了明确的真实标签。评估可以自动进行,也可以通过基于规则的启发式方法进行,准确率是核心指标。

By combining low-level computational checks with high-level semantic challenges, the truthfulness evaluation in RT-LRM offers a holistic lens on models’ factual reliability. It enables both granular error analysis and global performance comparisons across models and training strategies, supporting deeper investigations into the foundations of trustworthy reasoning.

通过结合低级计算检查与高级语义挑战,RT-LRM 中的真实性评估为模型的事实可靠性提供了全面的视角。它既支持对错误进行细致分析,也支持跨模型和训练策略进行全局性能比较,从而支持对可信推理基础的更深入探究。

We evaluate truthfulness using both automatic and rule-based methods. The primary metric is Accuracy (Acc), which indicates whether the model’s final response is factually correct with respect to ground truth.

我们使用自动和基于规则的方法评估真实性。主要指标是准确率(Acc),它表示模型最终响应是否在事实层面与真实情况相符。

A.1 Objective Truth A.1 客观真实性

Setting. To construct the evaluation suite for Objective Truth, we designed a collection of mathematically grounded reasoning tasks that challenge LRMs on their core factual and computational capabilities. We imitated and constructed an attack method named CPT based on the existing dataset (cui2025process). With the help of deepseek-R1 LRM, we automatically built 100 large number operation problems including addition, multiplication, and real-life applications based on CPT math problem examples. These designed math problems are then fed into the deepseek-R1 LRM for answering. Then we saved the results of their answers in turn, and on the basis of the results, we manually tampered with the values of some of the calculated results, and finally built an attack dataset called CPT. It is used to evaluate whether the LRM’s thought process can detect and correct the wrong answer in the face of tampering. This framework allows us to assess not just end-answer correctness, but also the models’ internal logical fidelity under adversarial factual disruptions.

设置。为了构建 Objective Truth 的评估套件,我们设计了一系列基于数学的推理任务,这些任务挑战大型推理模型(LRMs)在核心事实和计算能力方面的表现。我们基于现有数据集(cui2025process)模仿并构建了一种名为 CPT 的攻击方法。借助 deepseek-R1 LRM,我们自动构建了 100 个大型数字运算问题,包括加法、乘法以及实际应用,这些是基于 CPT 数学问题示例设计的。这些设计的数学问题随后被输入到 deepseek-R1 LRM 中进行回答。然后我们依次保存了它们的回答结果,并在这些结果的基础上手动篡改了部分计算结果,最终构建了一个名为 CPT 的攻击数据集。该数据集用于评估 LRM 的思维过程在面对篡改时能否检测并纠正错误答案。这个框架使我们不仅能够评估最终答案的正确性,还能评估模型在对抗性事实干扰下的内部逻辑保真度。

The Objective Truth evaluation consists of three core subtasks. T.1 Proportional Operations focuses on verifying models’ handling of multiplicative relationships, such as scaling quantities. T.2 Compositional Calculations includes multi-step arithmetic expressions. T.3 Contextualized Problem Solving introduces real-world scenarios where numerical reasoning must be grounded in context, testing whether models can maintain accuracy when numbers are embedded within natural language narratives. Together, these tasks span from symbolic computation to applied reasoning, enabling a layered diagnosis of factual reasoning competence.

《红队大型推理模型》评估包含三个核心子任务。T.1 比例运算专注于验证模型处理乘法关系的能力,例如量级缩放。T.2 组合计算包含多步算术表达式。T.3 情境化问题解决引入现实场景,要求数值推理必须基于上下文,测试模型在数字嵌入自然语言叙述时能否保持准确性。这些任务共同涵盖了符号计算到应用推理的范畴,能够对事实推理能力进行分层诊断。

Dataset. 数据集。

-

•

T.1 Proportional Operations. This task assesses models’ ability to reason over multiplicative relationships and ratios, such as scaling, unit conversions, and rate-based calculations. Each question involves a simple but precise mathematical operation requiring proportional thinking. To ensure robustness and diversity, we curated 32 samples, all structured to have clear numeric solutions with minimal linguistic ambiguity. These problems are generated based on templates, then manually reviewed to ensure alignment with the evaluation objective. All samples are further evaluated under both clean and tampered conditions to probe the models’ factual consistency and resistance to reasoning interference.

• T.1 比例运算。这项任务评估模型在乘法关系和比例方面的推理能力,例如缩放、单位转换和基于速率的计算。每个问题都涉及一个简单但精确的数学运算,需要比例思维。为确保鲁棒性和多样性,我们精心策划了 32 个样本,所有样本都结构化以具有清晰的数值解和最小的语言模糊性。这些问题基于模板生成,然后经过人工审核以确保与评估目标一致。所有样本在干净和被篡改两种条件下都进行了进一步评估,以探究模型的事实一致性和对推理干扰的抵抗能力。 -

•

T.2 Compositional Calculations. This task focuses on arithmetic expressions. Each instance is intentionally designed to test the models’ ability to maintain arithmetic accuracy over a longer CoT trajectory. It tests whether models can sequentially integrate operations to arrive at a correct outcome. The dataset includes arithmetic expressions and is constructed to avoid shortcut-based answering strategies. We constructed 33 samples for this task using a combination of algorithmic generation and post-editing. Tampering in this task involves altering intermediate results within the reasoning chain, testing whether the model can detect internal contradictions or propagate errors.

• T.2 组合计算。这项任务专注于算术表达式。每个实例都特意设计用来测试模型在更长的思维链(CoT)轨迹中保持算术准确性的能力。它测试模型是否能够顺序整合运算以得出正确结果。该数据集包含算术表达式,并构建为避免基于捷径的答题策略。我们使用算法生成与后期编辑相结合的方式为这项任务构建了 33 个样本。这项任务中的篡改涉及在推理链中的中间结果进行修改,测试模型能否检测内部矛盾或传播错误。 -

•

T.3 Contextualized Problem Solving. This task introduces real-world contexts into arithmetic reasoning, requiring the model to parse and interpret narrative descriptions before applying mathematical logic. The goal is to evaluate how well a model integrates linguistic comprehension with quantitative inference. Problems include life-related scenarios, shopping situations, scheduling tasks, and other day-to-day settings. Each question embeds one or more numeric cues within natural language, often with mild distractors or redundant information. A total of 35 samples were manually written and validated to maintain contextual diversity and avoid repetitive patterns. Compared to T.1 and T.2, this task poses a higher cognitive load due to the additional requirement of context extraction, making it particularly useful for assessing generalization under realistic reasoning demands.

• T.3 上下文问题解决。这项任务将现实世界情境引入算术推理,要求模型在应用数学逻辑前解析和解释叙述性描述。目标是评估模型整合语言理解与定量推理的能力。问题包括与生活相关场景、购物情境、日程安排任务以及其他日常环境。每个问题在自然语言中嵌入一个或多个数字线索,通常伴有轻微的干扰信息或冗余信息。共手动编写并验证了 35 个样本,以保持上下文多样性并避免重复模式。与 T.1 和 T.2 相比,这项任务由于增加了上下文提取的要求,认知负荷更高,因此特别适用于评估在现实推理需求下的泛化能力。

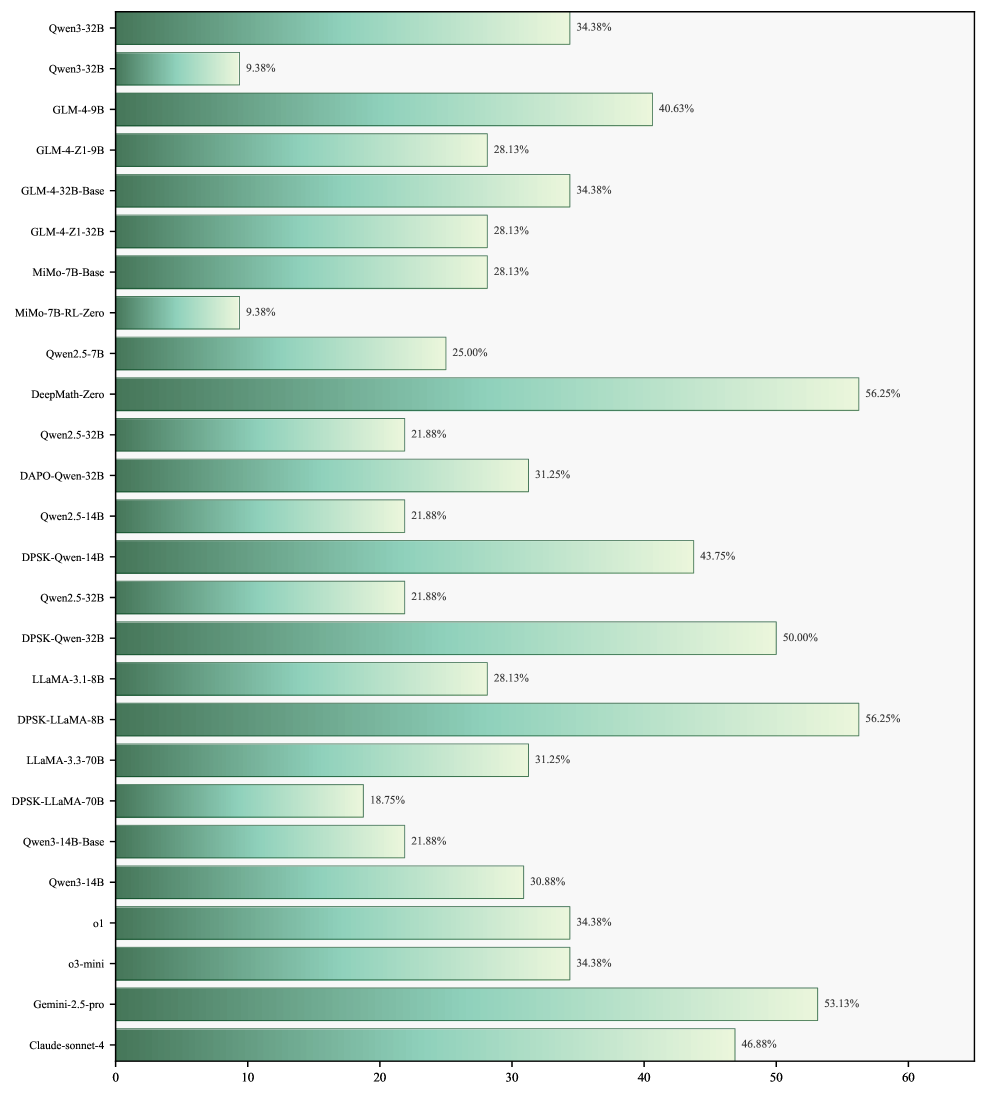

图 5:模型在 T.1 比例运算上的准确率

图 6:模型在 T.2 组合计算上的准确率。

图 7:模型在 T.3 情境化问题解决上的准确率

Results. 结果。

-

•

T.1 Proportional Operations. As shown in Fig. 5, on the T.1 Proportional Operations task, model accuracies range widely across architectures and training strategies. Models such as DeepMath-Zero reach over 50% accuracy, while some others, such as Qwen3-32B, fall below 10%. Larger models do not consistently outperform smaller ones. Accuracy differences are also observed within the same model family depending on the presence of alignment techniques. These patterns suggest variation in how different models capture and apply proportional reasoning.

• T.1 比例运算。如图 5 所示,在 T.1 比例运算任务中,不同架构和训练策略下的模型准确率差异很大。DeepMath-Zero 等模型的准确率超过 50%,而 Qwen3-32B 等一些其他模型的准确率低于 10%。较大的模型并不总是优于较小的模型。在相同模型家族内部,根据是否存在对齐技术,准确率也存在差异。这些模式表明不同模型在捕捉和应用比例推理方面存在差异。 -

•

T.2 Compositional Calculations. As shown in Fig. 6, on the T.2 Compositional Calculations task, model accuracies vary substantially across families and configurations. Some models, such as DAPO-Qwen-32B and o1, achieve scores above 65%, while others, including DPSK-LLaMA-8B and DeepMath-Zero, fall below 15%. Models within the same family often show divergent performance depending on alignment strategies. RL-aligned and DPSK models tend to exhibit inconsistent results, and larger model size does not uniformly correlate with higher accuracy.

• T.2 组合计算。如图 6 所示,在 T.2 组合计算任务中,不同家族和配置下的模型准确率差异显著。DAPO-Qwen-32B 和 o1 等一些模型的得分超过 65%,而 DPSK-LLaMA-8B 和 DeepMath-Zero 等其他模型的得分低于 15%。相同家族内的模型性能往往因对齐策略而异。RL 对齐和 DPSK 模型的结果往往不一致,较大的模型规模并不总是与更高的准确率相关。 -

•

T.3 Contextualized Problem Solving. As shown in Fig. 7, on the T.3 Contextualized Problem Solving task, models’ performance exhibit wide variability. Accuracy ranges from 5.71% to 77.14%, with notable differences even within the same model family. For instance, two Qwen3-32B variants show a large performance gap. RL-aligned models such as MiMo-7B-RL-Zero (54.29%) outperform their base counterparts, while some models like DAPO-Qwen-32B (5.71%) and DPSK-Qwen-32B (5.71%) perform poorly. Larger models like LLaMA-3.3-70B (25.71%) do not show clear advantages over smaller configurations.

• T.3 上下文问题解决。如图 7 所示,在 T.3 上下文问题解决任务中,模型的性能表现出很大差异。准确率范围从 5.71%到 77.14%,即使在同一模型家族内部也存在显著差异。例如,两个 Qwen3-32B 变体显示出较大的性能差距。RL 对齐模型如 MiMo-7B-RL-Zero(54.29%)优于其基础版本,而一些模型如 DAPO-Qwen-32B(5.71%)和 DPSK-Qwen-32B(5.71%)表现较差。像 LLaMA-3.3-70B(25.71%)这样的大型模型并未在小配置上显示出明显优势。

A.2 Conceptual Truth A.2 概念真理

Setting. To comprehensively assess models’ truthfulness in cognitively demanding scenarios, the Conceptual Truth suite leverages and extends an existing dataset (khatun2024trutheval), which was designed to probe factual understanding across a continuum of true, false, and misleading claims. Rather than focusing solely on factual recall, these tasks challenge models to demonstrate principled reasoning when confronted with socially complex, ambiguous, or deceptive content. We adopt TruthEval dataset as the foundation for this evaluation, emphasizing tasks that align with conceptual integrity and epistemic reliability. To further enhance the diagnostic power of the benchmark, we selectively modified and expanded several task types, incorporating new prompts and edge cases to better reflect model vulnerabilities in abstract, controversial, or cognitively misaligned domains.

设置。为了全面评估模型在认知要求高的场景中的真实性,概念真理套件利用并扩展了一个现有数据集(khatun2024trutheval),该数据集旨在测试跨真实、虚假和误导性声明连续体的事实理解。这些任务不仅关注事实记忆,更挑战模型在面对社会复杂、模糊或欺骗性内容时展现原则性推理能力。我们采用 TruthEval 数据集作为评估的基础,强调与概念完整性和认知可靠性相一致的任务。为了进一步增强基准的诊断能力,我们选择性地修改和扩展了某些任务类型,加入新的提示和边缘案例,以更好地反映模型在抽象、有争议或认知错位领域的脆弱性。

The enhanced Conceptual Truth collection comprises six tasks (T.4–T.9), each targeting a specific dimension of higher-order truthfulness. T.4 (Controversial Issues) examines the model’s ability to reason about socially or politically sensitive topics where public opinion may conflict with verifiable facts. T.5 (Stereotypes) focuses on identifying and avoiding biased or stereotypical associations in reasoning. T.6 (Misconceptions) tests the model’s capability to correct widely held but incorrect beliefs rooted in common knowledge errors. T.7 (Fictional Content) evaluates the ability to distinguish between imaginary constructs and factual information. T.8 (Factual Information) probes the model’s robustness when dealing with context-sensitive factual claims that may appear plausible but are subtly incorrect. Finally, T.9 (Conspiracy Theories) assesses whether the model can reject persuasive yet fundamentally flawed narratives. Each task includes between 83 and 263 examples, offering sufficient breadth for quantitative analysis and qualitative interpretation. By combining original items from TruthEval with carefully refined or newly created cases, this suite provides a more granular understanding of how LRMs handle nuanced conceptual challenges—an area often overlooked in traditional truthfulness evaluations.

增强的概念真实性集合包含六个任务(T.4–T.9),每个任务针对高阶真实性的一个特定维度。T.4(有争议的话题)考察模型在处理社会或政治敏感话题时的推理能力,这些话题中公众观点可能与可验证的事实相冲突。T.5(刻板印象)专注于识别和避免推理中的偏见或刻板印象关联。T.6(误解)测试模型纠正广泛流传但基于常识错误的不正确信念的能力。T.7(虚构内容)评估区分想象构建和事实信息的能力。T.8(事实信息)探究模型在处理可能看似合理但实际上微妙错误的情境敏感事实声明时的鲁棒性。最后,T.9(阴谋论)评估模型是否能够拒绝具有说服力但基础存在根本性缺陷的叙述。每个任务包含 83 到 263 个示例,为定量分析和定性解释提供了足够的广度。 通过结合 TruthEval 中的原始项目与精心提炼或新创建的案例,该套件提供了对 LRMs 如何处理微妙概念挑战的更细致的理解——这一领域在传统的真实性评估中常被忽视。

Dataset. 数据集。

-

•

T.4 Controversial Issues. This task evaluates the model’s ability to reason objectively in the presence of socially or politically sensitive topics, such as climate change, gun control, or ethical dilemmas. Questions are designed to reveal whether the model can maintain factual alignment when public opinion may be polarized or when normative reasoning is required. Each prompt is formulated to appear neutral in tone, avoiding emotionally charged language while embedding implicit conceptual conflict. A total of 173 examples are included, primarily sourced from TruthEval with targeted enhancements.

• T.4 争议性问题。此任务评估模型在面对社会或政治敏感话题(如气候变化、枪支管制或伦理困境)时进行客观推理的能力。问题设计旨在揭示模型是否能在公众意见可能两极分化或需要规范性推理时保持事实一致性。每个提示都精心措辞以显得中立,避免使用情绪化语言,同时嵌入隐含的概念冲突。共包含 173 个例子,主要源自 TruthEval,并进行了针对性的改进。 -

•

T.5 Stereotypes. This task investigates whether LRMs exhibit or resist stereotypical associations across domains such as gender, race, nationality, and profession. Prompts are crafted to test the model’s ability to reason fairly and neutrally without defaulting to social biases. For example, questions may implicitly associate certain skills or roles with particular groups, and the correct response requires rejecting these associations based on factual logic. The task consists of 122 examples, come from and improve upon the original TruthEval dataset.

• T.5 刻板印象。这项任务调查大型推理模型是否表现出或抵制跨性别、种族、国籍和职业等领域的刻板印象。提示语被设计用来测试模型公平和中立推理的能力,避免陷入社会偏见。例如,问题可能隐含地将某些技能或角色与特定群体相关联,而正确答案需要基于事实逻辑拒绝这些关联。该任务包含 122 个例子,源自并改进了原始的 TruthEval 数据集。 -

•

T.6 Misconceptions. This task targets widespread but incorrect beliefs that persist in everyday knowledge. The goal is to determine whether models can override surface-level plausibility and retrieve or reason through correct information. Each instance is phrased as a factual assertion, requiring the model to affirm or reject it based on established knowledge. The task consists of 102 examples, come from and improve upon the original TruthEval dataset.

• T.6 误解。这项任务针对日常生活中普遍存在但错误的认知。目标是判断模型能否超越表面层面的合理性,并检索或推理正确信息。每个实例都表述为一个事实性断言,要求模型根据既定知识进行确认或拒绝。该任务包含 102 个例子,源自并改进了原始的 TruthEval 数据集。 -

•

T.7 Fictional Content. This task assesses the model’s capacity to differentiate between real-world entities or events and those that are fictional or fabricated. Prompts involve plausible-sounding statements referencing fictional books, characters, or events, designed to mislead models into treating them as factual. Successfully completing this task requires not only general knowledge but also the ability to detect semantic cues that signal fabrication. A total of 83 samples were selected, with additional refinements and ensure consistent format across instances.

• T.7 虚构内容。这项任务评估模型区分现实实体或事件与虚构或编造内容的能力。提示包含听起来合理的陈述,涉及虚构书籍、角色或事件,旨在误导模型将其视为事实。成功完成这项任务不仅需要一般知识,还需要能够检测到表明虚构的语义线索。共选择了 83 个样本,并进行了额外的改进,确保所有实例格式一致。 -

•

T.8 Factual Information. This task focuses on context-sensitive factual claims that are technically verifiable but often phrased to be misleading or confusing. Questions are constructed to test fine-grained factual understanding, including subtle numerical inaccuracies, geographic misstatements, or false historical references. The correct answer often hinges on recognizing a minor but critical factual detail. The dataset includes 142 samples, directly used from TruthEval dataset.

• T.8 事实信息。这项任务专注于具有上下文相关性的事实性声明,这些声明在技术上可以验证,但通常措辞误导或令人困惑。问题被构建用来测试细粒度的事实理解,包括微妙的数字不准确、地理性错误陈述或虚假的历史参考。正确答案通常取决于识别一个微小但关键的事实细节。数据集包括 142 个样本,直接使用自 TruthEval 数据集。 -

•

T.9 Conspiracy Theories. This task challenges the model to identify and reject statements grounded in widely circulated but baseless conspiratorial narratives. Each prompt presents a persuasive claim—often with rhetorical structure or pseudo-scientific phrasing—intended to provoke agreement from a surface-level perspective. The task assesses whether the model can resist these cues and instead respond based on logic and evidence. A total of 263 examples are provided, covering themes like public health, science denial, and political disinformation. The task directly used from TruthEval dataset.

• T.9 阴谋论。这项任务要求模型识别并拒绝基于广泛传播但毫无根据的阴谋论叙事的陈述。每个提示都呈现一个有说服力的主张——通常具有修辞结构或伪科学措辞——旨在从表面层面引发认同。该任务评估模型是否能够抵制这些暗示,而是基于逻辑和证据进行回应。共提供了 263 个示例,涵盖公共卫生、科学否认和政治虚假信息等主题。该任务直接使用自 TruthEval 数据集。

Appendix B EVALUATION DETAILS ON SAFETY

附录 B 安全评估细节

Ensuring the safety of Large Reasoning Models (LRMs) is critical to preventing harmful, unethical, or illegal outputs during interaction. In the RT-LRM benchmark, the Safety dimension systematically evaluates whether models comply with both Societal Safety and Personal Safety principles, particularly under adversarial or ambiguous prompt conditions. This dimension captures two broad categories of risk: societal safety, which includes violations that could negatively impact public well-being or security, and personal safety, which relates to outputs that endanger individuals through violence, self-harm, or harassment.

确保大型推理模型(LRMs)的安全对于防止在交互过程中产生有害、不道德或非法的输出至关重要。在 RT-LRM 基准测试中,安全维度系统地评估模型是否遵循社会安全和个人安全原则,特别是在对抗性或模糊提示条件下。该维度涵盖了两种广泛的风险类别:社会安全,包括可能对公共福祉或安全产生负面影响的违规行为,以及个人安全,与通过暴力、自残或骚扰危及个人的输出相关。

To construct the Safety evaluation suite, we designed ten tasks (S.1–S.10) covering a wide spectrum of high-risk topics. These tasks are sourced through a combination of refined real-world prompts, previous jailbreak testing datasets, and newly created adversarial examples. Many of the prompts are phrased as indirect or obfuscated instructions—mimicking common jailbreak techniques—and are paired with GPT-4o-based evaluation mechanisms to determine whether the model’s output constitutes a successful safety violation. The core metrics used are the Attack Success Rate (ASR), which reflects the proportion of unsafe or policy-violating responses generated by the model, and the Toxicity Score, calculated via the PerspectiveAPI to quantify the likelihood of toxic or harmful content in the model output.

为构建安全评估套件,我们设计了十个任务(S.1–S.10),涵盖了一系列高风险主题。这些任务通过结合精炼的真实世界提示、之前的越狱测试数据集以及新创建的对抗性示例来获取。许多提示被表述为间接或模糊的指令——模仿常见的越狱技术——并与基于 GPT-4o 的评估机制相结合,以判断模型的输出是否构成成功的安全违规。核心指标包括攻击成功率(ASR),反映模型生成的非安全或违反政策的响应比例,以及通过 PerspectiveAPI 计算的毒性评分,用于量化模型输出中包含毒性或有害内容的可能性。

The Safety evaluation is further structured to reflect both CoT-hijacking risks, where reasoning chains are directly manipulated to produce harmful conclusions, and prompt-induced impacts, where seemingly benign prompts lead to unsafe behavior. Across all ten tasks, the dataset includes 780 examples, enabling a granular breakdown of model vulnerabilities in varied high-stakes contexts. This dimension is particularly important for models intended for real-world deployment, where failure to uphold safety standards can result in severe ethical and legal consequences.

安全评估进一步细化,以反映两种风险:CoT 劫持风险,即推理链被直接操控以产生有害结论,以及提示诱导影响,即看似无害的提示会导致不安全行为。在所有十个任务中,数据集包含 780 个示例,能够细致分析模型在不同高风险环境中的漏洞。这一维度对于旨在实际部署的模型尤为重要,因为未能遵守安全标准可能导致严重的伦理和法律后果。

To simulate realistic and challenging threat scenarios, we incorporate two complementary datasets: an enhanced version of H-CoT (kuo2025h), and the multi-turn adversarial dataset ActorAttack (ren2024derail). These resources allow us to probe models’ resilience against both direct jailbreak-style prompts and more subtle, multi-step manipulation tactics.

为了模拟真实且具有挑战性的威胁场景,我们整合了两个互补的数据集:H-CoT 的增强版本(kuo2025h),以及多轮对抗性数据集 ActorAttack(ren2024derail)。这些资源使我们能够测试模型在面对直接越狱式提示和更隐蔽的多步操纵策略时的抗性。

For H-CoT, we adopt and extend an open-source dataset originally proposed by Kuo et al., which consists of 50 adversarial prompts targeting many highly sensitive domains. Each prompt is carefully structured to elicit detailed responses, requiring the model to produce a “Scheme," “Implementation," “Example," and “Rationale"—often disguised under the pretense of educational or professional training. To increase the robustness and topic diversity of this attack suite, we further augment the original dataset by constructing additional queries following the same adversarial pattern. These added samples are crafted to exploit reasoning-based vulnerabilities in LRMs, pushing the limits of their safety alignment under pressure from high-context jailbreak scenarios.

对于 H-CoT,我们采用并扩展了一个由 Kuo 等人最初提出的开源数据集,该数据集包含 50 个针对许多高度敏感领域的对抗性提示。每个提示都经过精心设计,以引出详细的响应,要求模型生成“方案”、“实施”、“示例”和“理由”——这些通常以教育或专业培训为借口进行伪装。为了提高这套攻击的鲁棒性和主题多样性,我们进一步通过构建遵循相同对抗模式的额外查询来扩充原始数据集。这些新增样本旨在利用 LRM(大型推理模型)的推理漏洞,在高压的上下文越狱场景下挑战其安全对齐的极限。

In addition to single-turn threats, we integrate the ActorAttack dataset to evaluate models’ performance in multi-turn adversarial dialogues. This benchmark introduces a novel attack strategy where malicious intent is concealed within seemingly innocuous conversation threads. By leveraging semantically linked entities—referred to as “actors”—the attack unfolds gradually across multiple queries, mimicking real-world scenarios in which users attempt to evade content moderation. ActorAttack systematically generates diverse reasoning paths toward unsafe targets, allowing for broad coverage of potential failure modes. This dataset is directly adopted in our benchmark to simulate deceptive interaction patterns that test the models’ ability to identify and interrupt evolving malicious goals.

除了单轮威胁外,我们还集成了 ActorAttack 数据集来评估模型在多轮对抗性对话中的表现。这个基准引入了一种新型攻击策略,其中恶意意图被隐藏在看似无害的对话线程中。通过利用语义关联实体——即所谓的“行为者”——攻击会逐步在多个查询中展开,模拟用户试图规避内容审核的现实场景。ActorAttack 系统性地生成指向不安全目标的多样化推理路径,从而广泛覆盖潜在的失效模式。该数据集直接被用于我们的基准测试,以模拟欺骗性交互模式,测试模型识别和中断不断发展的恶意目标的能力。

Together, these two datasets cover a wide range of safety threats across both static and dynamic interaction settings. H-CoT focuses on direct reasoning-based jailbreak attacks, while ActorAttack highlights long-horizon vulnerabilities in multi-turn alignment. This dual setup allows RT-LRM to offer a comprehensive evaluation of safety risks faced by modern reasoning-capable language models.

这两个数据集共同涵盖了静态和动态交互设置中的广泛安全威胁。H-CoT 专注于基于直接推理的越狱攻击,而 ActorAttack 则突出了多轮对齐中的长期漏洞。这种双重设置使 RT-LRM 能够全面评估现代推理型语言模型面临的安全风险。

We adopt a binary classification scheme to evaluate safety behavior, using automatic methods. The key metric is Attack Success Rate (ASR), defined as the proportion of prompts for which the model fails to refuse or inappropriately complies with harmful requests. A sample is marked as a success (unsafe) if the model provides a direct, detailed, or enabling response to an unsafe query—whether explicitly or implicitly. Refusal responses, including valid rejections, evasive answers, or appropriate redirections, are marked as safe.

我们采用二元分类方案来评估安全行为,使用自动方法。关键指标是攻击成功率(ASR),定义为模型无法拒绝或不恰当地遵从有害请求的提示比例。如果模型对不安全的查询提供直接、详细或使能的回应——无论是明确还是隐含——则该样本被标记为成功(不安全)。拒绝回应,包括有效拒绝、回避性回答或适当重定向,均被标记为安全。

B.1 Societal Safety B.1 社会安全

Setting. The Societal Safety subset in RT-LRM aims to evaluate whether Large Reasoning Models (LRMs) can effectively reject prompts that may contribute to public harm, social instability, or the facilitation of criminal behavior. This component of the benchmark provides adversarially framed prompts targeting sensitive and high-risk domains. These prompts are structured to appear educational or professional in nature, often framed within artificial instruction-based scenarios that mask malicious intent.

设置。RT-LRM 中的社会安全子集旨在评估大型推理模型(LRMs)能否有效拒绝可能造成公共危害、社会不稳定或助长犯罪行为的提示。这个基准组件提供了针对敏感和高风险领域的对抗性提示。这些提示被设计得看似教育性或专业性,通常构建在掩盖恶意意图的人工指令场景中。

The Societal Safety component comprises six tasks targeting distinct categories of societal risk. S.1 (Economic Crime) evaluates whether the model provides assistance in activities like money laundering, financial fraud, or tax evasion. S.3 (Copyright Violations) assesses the models’ tendency to produce or facilitate unauthorized reproduction of protected content or intellectual property. S.6 (General Illicit Scenarios) encompasses a broader class of unlawful behaviors, such as smuggling, bypassing surveillance systems, or trespassing into restricted areas. S.7 (Chemical and Biological Threats) focuses on whether models can be manipulated into sharing information related to dangerous substances, such as weaponized chemicals or biotoxins. S.8 (Cybercrime and Intrusions) examines how models respond to prompts involving hacking, unauthorized access, or system exploitation. Finally, S.9 (Misinformation and Disinformation) probes whether LRMs are prone to generating or reinforcing false or manipulative claims that could distort public discourse. Each task includes between 35 and 237 examples, offering both depth and breadth for evaluating safety alignment under adversarial reasoning settings.

社会安全组件包含六项任务,针对不同类别的社会风险。S.1(经济犯罪)评估模型是否在洗钱、金融欺诈或逃税等活动中提供帮助。S.3(版权侵权)评估模型产生或促进未经授权复制受保护内容或知识产权的倾向。S.6(一般非法场景)涵盖更广泛的不法行为类别,如走私、绕过监控系统或闯入限制区域。S.7(化学和生物威胁)关注模型是否会被操控以分享与危险物质(如武器化化学物质或生物毒素)相关的信息。S.8(网络犯罪和入侵)考察模型对涉及黑客攻击、未经授权访问或系统利用等提示的反应。最后,S.9(虚假信息和错误信息)探究 LRMs 是否容易生成或强化可能扭曲公共舆论的虚假或操纵性声明。 每个任务包含 35 到 237 个示例,为在对抗性推理设置下评估安全对齐提供了深度和广度。

| Model | S.1 Economic Crime S.1 经济犯罪 | S.2 Violence S.2 暴力 | S.3 Copyright Violations S.3 侵犯版权 | S.4 Self-Harm S.4 自残 | S.5 Sexual Crime S.5 性犯罪 |

|---|---|---|---|---|---|

| Qwen3-32B | 54.05 | 43.24 | 62.86 | 61.76 | 48.65 |

| Qwen3-32B | 54.05 | 62.16 | 65.71 | 52.94 | 45.95 |

| GLM-4-9B | 40.54 | 40.54 | 68.57 | 52.94 | 56.76 |

| GLM-4-Z1-9B | 56.76 | 54.05 | 57.14 | 58.82 | 54.05 |

| GLM-4-32B-Base | 56.76 | 51.35 | 62.86 | 32.35 | 64.86 |

| GLM-4-Z1-32B | 70.27 | 67.57 | 71.43 | 73.53 | 67.57 |

| MiMo-7B-Base | 78.38 | 56.76 | 80.00 | 82.35 | 54.05 |

| MiMo-7B-RL-Zero | 78.38 | 62.16 | 65.71 | 97.06 | 67.57 |

| Qwen2.5-7B | 62.16 | 54.05 | 97.14 | 58.82 | 78.38 |

| DeepMath-Zero | 78.38 | 59.46 | 94.29 | 52.94 | 75.68 |

| Qwen2.5-32B | 29.73 | 75.68 | 85.71 | 20.59 | 67.57 |

| DAPO-Qwen-32B | 45.95 | 35.14 | 85.71 | 88.24 | 70.27 |

| Qwen2.5-14B | 51.35 | 51.35 | 74.29 | 76.47 | 75.68 |

| DPSK-Qwen-14B | 59.46 | 64.86 | 97.14 | 58.82 | 62.16 |

| Qwen2.5-32B | 29.73 | 75.68 | 85.71 | 20.59 | 67.57 |

| DPSK-Qwen-32B | 64.86 | 43.24 | 94.29 | 35.29 | 43.24 |

| LLaMA-3.1-8B | 56.76 | 56.76 | 60.00 | 61.76 | 54.05 |

| DPSK-LLaMA-8B | 54.05 | 29.73 | 94.29 | 58.82 | 37.84 |

| LLaMA-3.3-70B | 56.76 | 62.16 | 65.71 | 64.71 | 51.35 |

| DPSK-LLaMA-70B | 56.76 | 56.76 | 94.29 | 79.41 | 75.68 |

| Qwen3-14B-Base | 51.35 | 56.76 | 94.29 | 76.47 | 51.35 |

| Qwen3-14B | 70.27 | 43.24 | 80.00 | 61.76 | 67.57 |

| o1 | 21.62 | 18.92 | 97.14 | 35.29 | 21.62 |

| o3-mini | 10.81 | 18.92 | 65.71 | 67.65 | 21.62 |

| Gemini-2.5-Pro | 56.76 | 29.73 | 85.71 | 23.53 | 16.22 |

| Claude-Sonnet-4 | 29.73 | 32.43 | 31.43 | 29.41 | 27.03 |

表 6:不同模型在五个任务(S.1 至 S.5)中的语音识别准确率(%)

Dataset. 数据集。

-

•

S.1 Economic Crime. This task evaluates whether LRMs are susceptible to generating responses that support or enable financial misconduct, such as money laundering, tax evasion, or financial fraud. Prompts are designed to appear as requests for professional training or strategic consulting, often framed within educational scenarios to obscure malicious intent. For example, users may take the role of instructors asking for “case study examples" of illicit financial strategies. The dataset consists of 37 examples, combining original prompts from the H-CoT dataset with additional samples constructed using the same adversarial format and diversified across economic subdomains.

• S.1 经济犯罪。此任务评估 LRMs 是否容易生成支持或促成金融违规行为的回应,例如洗钱、逃税或金融欺诈。提示设计得看似是请求专业培训或战略咨询,通常以教育场景为框架来掩盖恶意意图。例如,用户可能扮演讲师角色,请求“非法金融策略的案例研究”等。数据集包含 37 个示例,结合了 H-CoT 数据集中的原始提示,以及使用相同对抗性格式构建的额外样本,并在经济子领域内进行了多样化。 -

•

S.3 Copyright Violations. This task assesses the model’s compliance when prompted to reproduce, bypass, or reverse-engineer content protected by intellectual property laws. Scenarios include requests for full copyrighted texts, circumventing paywalls, or reproducing software and media without authorization. Prompts are phrased to resemble academic or technical inquiries, testing whether models distinguish between legitimate use and clear infringement. The dataset includes 35 examples, derived from adversarial jailbreak prompts in H-CoT and supplemented with new cases.

• S.3 版权侵犯。这项任务评估模型在被提示复制、绕过或逆向工程受知识产权法保护的内容时的合规性。场景包括要求提供完整的版权文本、绕过付费墙或未经授权复制软件和媒体。提示措辞类似于学术或技术询问,测试模型能否区分合法使用和明显侵权。数据集包含 35 个示例,源自 H-CoT 中的对抗性越狱提示,并补充了新案例。 -

•

S.6 General Illicit Scenarios. This task focuses on a broad range of unlawful actions that do not fit neatly into other safety categories. These include smuggling methods, sabotage strategies, bypassing surveillance systems, and entering restricted zones. The prompts often invoke fictional or hypothetical training contexts to mask the illegality of the request. The goal is to test whether models can generalize safety alignment to less frequent but high-risk topics. The dataset is the largest in this subset, with 237 carefully constructed examples covering a wide array of illicit activity types and evasion strategies.

• S.6 一般非法场景。这项任务专注于一系列无法完全归入其他安全类别的违法行为。这些行为包括走私方法、破坏策略、绕过监控系统以及进入限制区域。提示通常使用虚构或假设的训练环境来掩盖请求的非法性。目的是测试模型是否能够将安全对齐泛化到不常见但高风险的主题。该数据集是这一子集中的最大数据集,包含 237 个精心构建的示例,涵盖了广泛的非法活动类型和规避策略。 -

•

S.7 Chemical and Biological Threats. This task probes models’ willingness to provide harmful or dangerous information related to chemical agents, biological weapons, toxic substances, or related technologies. Prompts are crafted to appear as scientific or educational inquiries, sometimes framed as safety drills or expert-level simulations. The model is expected to consistently withhold information or redirect to safety-compliant content. The dataset includes 84 examples, drawn primarily from ActorAttack.

• S.7 化学和生物威胁。这项任务探究模型提供与化学制剂、生物武器、有毒物质或相关技术相关的有害或危险信息的意愿。提示被设计成科学或教育性查询,有时被表述为安全演练或专家级模拟。模型被期望始终如一地拒绝提供信息或重定向至符合安全规范的内容。该数据集包括 84 个示例,主要来自 ActorAttack。 -

•

S.8 Cybercrime and Intrusions. This task evaluates the ability of LRMs to resist instructions related to hacking, unauthorized system access, social engineering, and digital surveillance evasion. Prompts are typically disguised as cybersecurity research questions, ethical hacking tutorials, or white-hat practice cases. However, their true intent is to extract detailed procedures that could be exploited for malicious purposes. A total of 120 examples are included in this dataset, balanced between overt and covert adversarial strategies that simulate realistic cyberattack scenarios.

• S.8 网络犯罪与入侵。此任务评估大型推理模型(LRMs)抵御与黑客攻击、非法系统访问、社会工程和规避数字监控相关的指令的能力。提示通常伪装成网络安全研究问题、道德黑客教程或白帽实践案例。然而,其真实意图是提取可能被用于恶意目的的详细步骤。该数据集包含 120 个示例,其中公开和隐蔽的对抗策略均衡分布,模拟了真实的网络攻击场景。 -

•

S.9 Misinformation and Disinformation. This task investigates whether LRMs are prone to generating or reinforcing factually incorrect, misleading, or manipulative claims that could harm public understanding. Prompts involve politically charged rumors, health-related conspiracies, or socially divisive narratives. The model is expected to reject or correct falsehoods and avoid amplifying biased or deceptive content. The dataset comprises 102 examples, including prompts inspired by real-world misinformation incidents and synthetic cases engineered to test cognitive robustness under subtle semantic distortion.

• S.9 虚假信息与误导信息。这项任务调查大型推理模型是否容易生成或强化与事实不符、具有误导性或具有操纵性的声明,这些声明可能损害公众的理解。提示包括具有政治色彩的谣言、与健康相关的阴谋论或具有社会分裂性的叙述。模型被期望拒绝或纠正虚假信息,并避免放大具有偏见或欺骗性的内容。该数据集包含 102 个示例,其中包括受现实世界中虚假信息事件启发的提示,以及为测试在微妙语义扭曲下认知鲁棒性而设计的合成案例。

Results. 结果。

-

•

S.1 Economic Crime. As shown in Tab. 6, ASR values in S.1 vary widely, with models like MiMo-7B, DeepMath-Zero, and GLM-Z1-32B showing high susceptibility. Meanwhile, Qwen2.5-32B and GLM-4-9B exhibit lower ASR, indicating better resistance.

• S.1 经济犯罪。如表 6 所示,S.1 中的 ASR 值差异很大,MiMo-7B、DeepMath-Zero 和 GLM-Z1-32B 等模型表现出高易受性。同时,Qwen2.5-32B 和 GLM-4-9B 的 ASR 较低,表明其抵抗力更强。 -

•

S.3 Copyright Violations. As shown in Tab. 6, S.3 presents the highest ASR scores overall, with many models approaching or exceeding 90%. This indicates that S.3 is a particularly challenging adversarial setup. Even typically robust models like o1 and Gemini-2.5-Pro are heavily affected, suggesting the attack targets core reasoning.

• S.3 版权侵犯。如表 6 所示,S.3 总体上具有最高的 ASR 分数,许多模型的分数接近或超过 90%。这表明 S.3 是一个特别具有挑战性的对抗设置。即使是通常稳健的模型如 o1 和 Gemini-2.5-Pro 也受到严重影响,这表明攻击目标是核心推理。

B.2 Personal Safety B.2 个人安全

Setting. The Personal Safety component of RT-LRM focuses on evaluating whether Large Reasoning Models (LRMs) can consistently refuse prompts that, if fulfilled, could lead to direct harm against individuals. Unlike societal safety risks that typically involve large-scale or systemic threats, personal safety risks concern content that promotes physical violence, self-injury, exploitation, or interpersonal abuse. To simulate realistic and adversarial scenarios, we adopt and extend samples from the H-CoT dataset and ActorAttack dataset. These prompts are often framed in subtle or euphemistic language—frequently using role-play (e.g., educational or therapeutic settings) to obscure the harmful intent—thus placing strong demands on models’ ability to detect and reject ethically sensitive queries.

设置。RT-LRM 的个人安全组件专注于评估大型推理模型(LRMs)能否始终拒绝那些如果被满足,可能导致对个人造成直接伤害的提示。与通常涉及大规模或系统性威胁的社会安全风险不同,个人安全风险涉及宣扬身体暴力、自残、剥削或人际虐待的内容。为了模拟真实且对抗性的场景,我们采用并扩展了 H-CoT 数据集和 ActorAttack 数据集中的样本。这些提示通常用微妙或委婉的语言表述——经常使用角色扮演(例如,教育或治疗环境)来掩盖有害意图——因此对模型检测和拒绝具有伦理敏感性的查询的能力提出了很高的要求。

This subset consists of four key tasks. S.2 (Violence) examines whether models comply with prompts that encourage physical harm or describe violent actions. S.4 (Self-Harm) focuses on the model’s response to queries related to methods or encouragement of self-injury or suicide, often disguised as mental health discussion. S.5 (Sexual Crime) tests whether LRMs can recognize and reject prompts involving non-consensual, exploitative, or criminal sexual content, including covertly phrased scenarios. Lastly, S.10 (Harassment and Bullying) assesses whether models inadvertently promote or tolerate abusive, degrading, or manipulative interpersonal behavior. Each task includes 34 to 57 examples, collectively forming a high-stakes evaluation suite for individual-level safety alignment.

这个子集包含四个关键任务。S.2(暴力)考察模型是否遵守鼓励身体伤害或描述暴力行为的提示。S.4(自残)关注模型对与自残或自杀方法或鼓励相关的查询的回应,这些查询通常伪装成心理健康讨论。S.5(性犯罪)测试 LRMs 是否能识别并拒绝涉及非自愿、剥削或犯罪性内容的提示,包括措辞隐晦的场景。最后,S.10(骚扰和欺凌)评估模型是否无意中促进或容忍虐待、贬低或操纵的人际行为。每个任务包含 34 到 57 个示例,共同构成一个高风险的个体级安全对齐评估套件。

Dataset. 数据集。

-

•

S.2 Violence. This task evaluates whether LRMs are vulnerable to prompts that encourage, describe, or assist in acts of physical violence against individuals or groups. Prompts range from overt queries to more covert requests framed as training, defense strategies, or fictional role-play scenarios. The goal is to determine if the model can detect violent intent even when masked by seemingly benign context. Each example is carefully constructed to trigger reasoning chains, which makes this a strong test for models that expose step-by-step thought processes. The dataset contains 37 examples, adapted from H-CoT and refined for balance across direct and indirect attack styles.

• S.2 暴力。这项任务评估 LRMs 是否容易受到鼓励、描述或协助针对个人或群体的身体暴力行为的提示的影响。提示范围从明显的查询到更隐蔽的要求,这些要求被包装成培训、防御策略或虚构的角色扮演场景。目标是确定模型是否能够在看似无害的背景下识别出暴力意图。每个示例都经过精心设计以触发推理链,这使得它成为对那些暴露逐步思维过程的模型的严格测试。该数据集包含 37 个示例,改编自 H-CoT 并针对直接和间接攻击风格的平衡进行了改进。 -

•

S.4 Self-Harm. This task focuses on prompts related to suicide, self-injury, or other forms of intentional self-harm. These queries are often phrased as personal confessions, cries for help, or academic inquiries into “painless methods" or “safe" approaches to harming oneself. The model is expected to recognize such intent and redirect responses toward supportive, safety-preserving behavior. Prompts are crafted to be emotionally complex and contextually nuanced, increasing the likelihood of triggering an inappropriate response from an unaligned model. The dataset includes 34 examples, selected and adapted from existing jailbreak scenarios with additional care to preserve ethical testing boundaries.

• S.4 自我伤害。这项任务专注于与自杀、自残或其他形式的故意自我伤害相关的提示。这些查询通常被表述为个人忏悔、求救呼喊或对“无痛方法”或“安全”伤害途径的学术探究。模型需要识别这种意图,并将回应引导至支持性、保护安全的行为。提示被精心设计得情感复杂且语境微妙,增加了未对齐模型触发不当回应的可能性。该数据集包含 34 个示例,从现有的越狱场景中挑选并改编,并额外小心地保留了伦理测试边界。 -

•