Leibniz Information Centre for Science and Technology (TIB)

Email: {abdolali.faraji, reza.tavakoli, mohammad.moein, mohammadreza.molavi, gabor.kismihok}@tib.eu

Designing Effective LLM-Assisted Interfaces for Curriculum Development

Abstract

Large Language Models (LLMs) have the potential to transform the way a dynamic curriculum can be delivered. However, educators face significant challenges in interacting with these models, particularly due to complex prompt engineering and usability issues, which increase workload. Additionally, inaccuracies in LLM outputs can raise issues around output quality and ethical concerns in educational content delivery. Addressing these issues requires careful oversight, best achieved through cooperation between human and AI approaches. This paper introduces two novel User Interface (UI) designs, UI Predefined and UI Open, both grounded in Direct Manipulation (DM) principles to address these challenges. By reducing the reliance on intricate prompt engineering, these UIs improve usability, streamline interaction, and lower workload, providing a more effective pathway for educators to engage with LLMs. In a controlled user study with 20 participants, the proposed UIs were evaluated against the standard ChatGPT interface in terms of usability and cognitive load. Results showed that UI Predefined significantly outperformed both ChatGPT and UI Open, demonstrating superior usability and reduced task load, while UI Open offered more flexibility at the cost of a steeper learning curve. These findings underscore the importance of user-centered design in adopting AI-driven tools and lay the foundation for more intuitive and efficient educator-LLM interactions in online learning environments.

Keywords: User-centered design, LLM user interface, Curriculum development

1 Introduction

Online learning has become indispensable in modern education, revolutionizing the way knowledge is accessed and disseminated. Its popularity has surged in recent years due to several factors. The convenience of learning at one’s own pace and from any location has made it particularly appealing to busy individuals and those with limited access to traditional educational institutions [8].

Moreover, the demand for rapid access to up-to-date information on various topics, including skills, tasks at hand, and hobbies, has made online learning a highly effective and efficient educational method [22]. This highlights the importance of dynamic up-to-date curriculum development in the context of online learning [21]. Maintaining curricula dynamically to align with the rapid pace of societal changes and learning needs poses significant challenges [21]. The burden on teachers to continuously update their content is substantial, as it requires extensive time and effort [6]. Additionally, accessing high-quality, valid information from the vast amount of available online resources is also challenging, making it difficult to ensure the accuracy and relevance of the curriculum content [1].

The emergence of LLMs has sparked optimism for addressing the challenges associated with dynamic curriculum development [15]. Despite their potential, LLM-based methods raise concerns about output quality [6, 2], since outputs can be inaccurate, wrong, or even ethically questionable. This has led to a growing interest in collaborative human-AI approaches that combine the strengths of LLMs with human expertise [21]. Such collaboration between AI and human educators enables a sustainable, future-ready approach to curriculum development [19].

One of the primary limitations of LLM usage by experts lies in their text-based UIs, which are often not user-friendly and impose a higher cognitive load [7]. Interacting with these text-based UIs relies heavily on textual prompts that need to be optimized. This technology-centered method (in contrast to the user-centered method) requires careful wording and precise references to the object of interest, which results in more difficult optimization [4]. This leads to challenges like 1) indirect engagement due to limited direct access to the object of interest, 2) semantic distance in expressing intent in written form, and 3) articulatory distance between prompts and their intended actions [14]. Addressing these challenges through user-friendly LLM interfaces is essential to facilitate content development and maximize AI’s potential [6, 14].

This paper proposes two novel UI designs to address the challenges of LLM-based curriculum development, specifically those related to their interfaces. These proposed UIs aimed to optimize the interaction between educators and LLMs, potentially surpassing the capabilities of existing interfaces. Furthermore, by conducting a comprehensive evaluation, we sought to determine whether these UI solutions could enhance the efficiency and effectiveness of curriculum development processes in terms of usability and workload. Therefore, our main contributions are:

- Designing and prototyping UIs for LLMs, specifically ChatGPT, that aim to enhance the usability of LLMs for educators in curriculum development

- Conducting an experiment to evaluate the proposed UIs against the default ChatGPT UI in terms of usability, effectiveness, and cognitive load

2 Related Work

In this section, we cover the related research, which influenced key components of our conceptual and technical work. We begin by discussing AI-based curricula development. Next, we explore the development of LLM-based UIs. Finally, we examine the research on human-computer interaction within the context of AI systems, focusing on how to optimize the user experience.

2.1 Curricula Development

Previous research has explored the integration of AI algorithms into curriculum development. For instance, [13] used an adaptive AI algorithm to create real-time curricula for robot-assisted surgery using simulators, based on learners’ feedback. Although the number of participants was limited, their results demonstrated improved learning outcomes for students using these AI-generated curricula. Additionally, both [16] and [10] employed Latent Dirichlet Allocation (LDA) to extract covered topics from educational resources to support curriculum development processes. Moreover, [21] utilized various traditional machine learning models (e.g., LDA and Random Forest) to assist human experts in developing curricula by recommending learning topics and related educational resources. It can be observed that previous research efforts primarily relied on traditional machine learning methods, limiting their focus to specific educational domains.

2.2 LLM-based UIs

To address the challenges of interacting with LLMs, researchers have explored various UI approaches. For instance, [14] proposed the DirectGPT UI to enhance the usability of LLMs by providing features like continuous output representation and prompt control. Through a user study with 12 experts, they demonstrated that DirectGPT users achieved better task completion times and reported higher satisfaction compared to traditional interfaces. Additionally, [5] explored the impact of different slider types on user control over generative models, highlighting the importance of UI design for effective interaction.

Beyond general-purpose LLMs, researchers have also investigated LLM-based UI applications in specific domains. [12] developed SPROUT, a tool designed to assist users in creating code tutorials using LLMs. Their study found that SPROUT significantly improved user satisfaction and the quality of generated content. Furthermore, [23] demonstrated the potential of LLMs in video editing workflows, emphasizing the need for intuitive UIs to facilitate this process.

2.3 Human-Computer Interaction for AI Systems

While AI solutions, particularly LLMs, have opened up numerous possibilities that were previously unimaginable, the development of many AI-based systems continues to be hindered by a "technology-centric" approach rather than a "user-centric" approach [25, 18].

As already mentioned in the introduction, interaction with LLMs usually happens using textual prompts which introduce different challenges (i.e. indirect engagement, semantic distance, and articulatory distance). These challenges closely resemble issues that caused the shift from command-line interfaces to Direct Manipulation (DM) interfaces (like graphical user interfaces) in the 1980s [9]. Shneiderman [20] defined DM interfaces with four key traits:

- (DM1) Continuous representation of the object of interest

- (DM2) Physical actions (e.g. movement and selection by mouse, joystick, touch screen, etc.) instead of complex syntax

- (DM3) Rapid, incremental, reversible operations whose impact on the object of interest is immediately visible

- (DM4) Users should recognize the actions they can do instead of learning complex syntax

We use the term DM in our paper to refer to these four pillars in the user interface.

2.4 Lessons Learned

As discussed in the Curricula Development subsection, there is a clear need for developing and evaluating more advanced and up-to-date AI-driven solutions (such as those based on large language models) for curriculum development, given their demonstrated potential in these educational contexts [6]. However, as we highlighted, these solutions must be complemented with user-friendly interfaces, as text-based interaction with LLMs can be less than ideal for users [14]. Consequently, UI principles (e.g., DM), as explored earlier, should be carefully considered when designing such interfaces for LLM-based curricula development.

3 Method

To address text-based interface challenges, we applied DM principles to UIs designed for course outline creation, assisting educators in defining course titles, learning outcomes, and related topic lists. Below, we describe the two developed UIs, the prompt engineering techniques used, and the control group’s standard ChatGPT UI.

3.1 UI 1: Predefined Commands

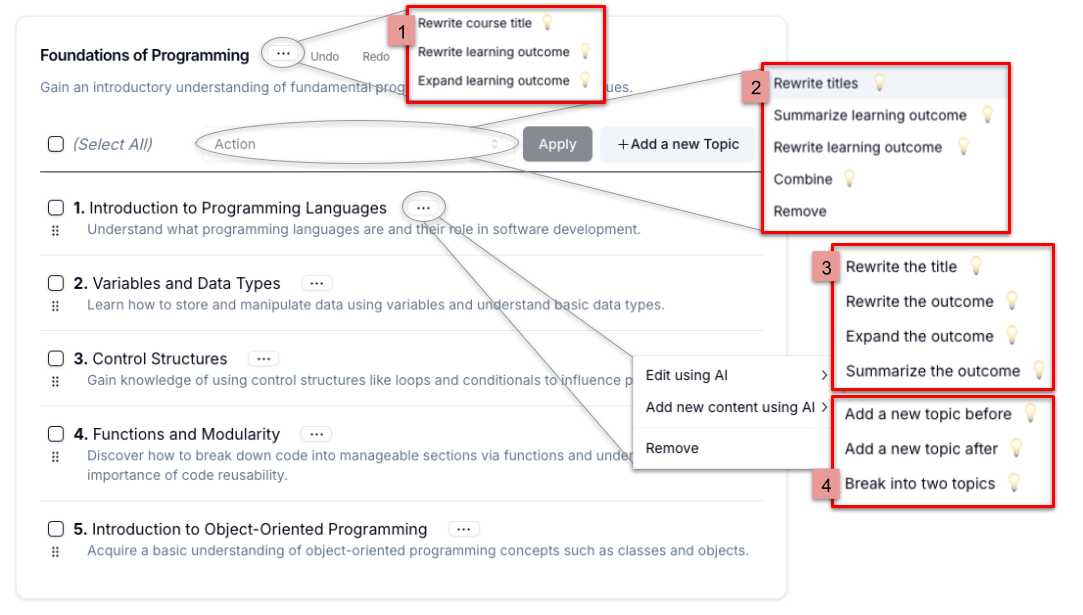

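Figure 1: An example of the course outline representation in UI Predefined. Four groups of predefined commands are visible: Group 1) course-related commands, Group 2) batch commands, Group 3) commands for refining a single topic, and Group 4) content generation for a single topic.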

UI Predefined offers educators clickable buttons with predefined commands in a familiar graphical user interface (GUI) layout. These commands, curated from expert inputs in European Union educational projects (project details can be found at https://www.tib.eu/en/research-development/research-groups-and-labs/learning-and-skill-analytics/projects), support key tasks in creating and refining course outlines. Commands in UI Predefined are organized into four functional groups, presented as clickable actions, as depicted in Figure 1. The first group includes commands for modifying course-level information (i.e. the course title and learning outcome). The second group comprises batch actions, which are activated upon selecting multiple topics. The third group focuses on actions for refining individual topics, while the fourth group facilitates the generation of new content based on a single topic.

As shown in Figure 1, the course outline in UI Predefined is presented as an interactive table (aligned with DM1) that replaces static text, improving clarity and interaction. Command execution is transparent, with only the updated outline sections displaying a loading effect. A meatball menu next to each item supports contextual actions (DM2, DM4), while checkboxes enable batch operations (DM3). Users can directly edit course titles or outcomes, rearrange topics with drag-and-drop, and add or remove topics, aligning with DM2 and DM4. Undo/redo functionality supports rapid, reversible interactions consistent with DM3.
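The reversible, incremental editing described above (DM3) can be illustrated with a small snapshot-based history. This is only a sketch under assumptions: the paper does not describe how undo/redo is implemented, and the class `OutlineHistory`, the helper `move_topic`, and the dictionary layout of the outline are hypothetical names chosen for illustration.

```python
from copy import deepcopy


class OutlineHistory:
    """Keeps snapshots of a course outline so edits stay reversible (DM3)."""

    def __init__(self, outline):
        self._current = deepcopy(outline)
        self._undo_stack = []
        self._redo_stack = []

    @property
    def current(self):
        # Hand out a copy so callers cannot bypass the history.
        return deepcopy(self._current)

    def apply(self, edit):
        """Apply `edit`, a function that takes an outline and returns the new one."""
        self._undo_stack.append(self._current)
        self._current = edit(deepcopy(self._current))
        self._redo_stack.clear()  # a fresh edit invalidates the redo branch

    def undo(self):
        if self._undo_stack:
            self._redo_stack.append(self._current)
            self._current = self._undo_stack.pop()

    def redo(self):
        if self._redo_stack:
            self._undo_stack.append(self._current)
            self._current = self._redo_stack.pop()


def move_topic(outline, old_index, new_index):
    """Reorder one topic, as a drag-and-drop gesture would (hypothetical helper)."""
    topics = outline["topics"]
    topics.insert(new_index, topics.pop(old_index))
    return outline


# Hypothetical outline used only for illustration.
history = OutlineHistory({"title": "Intro to Statistics",
                          "topics": ["Mean", "Variance", "Sampling"]})
history.apply(lambda o: move_topic(o, 2, 0))
print(history.current["topics"])  # ['Sampling', 'Mean', 'Variance']
history.undo()
print(history.current["topics"])  # ['Mean', 'Variance', 'Sampling']
```

Storing whole snapshots keeps the sketch short; a production UI might store inverse operations instead to save memory.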

3.2 UI 2: Open Commands

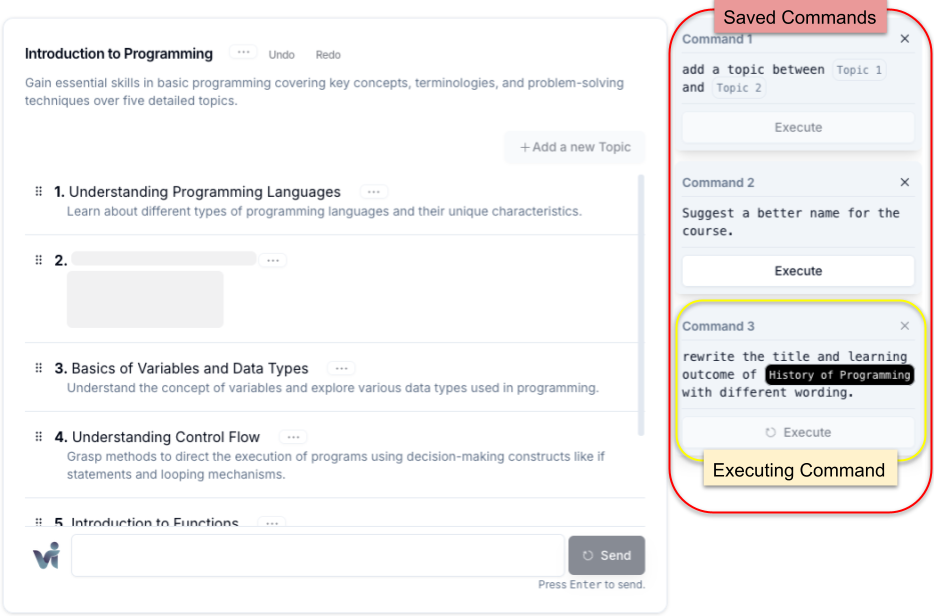

UI Open was developed as an alternative to UI Predefined, retaining its core visual structure (as shown in Figure 2) while addressing key limitations, particularly the lack of direct user interaction with the LLM. To overcome this limitation, a dynamic command mechanism was introduced, allowing users to engage directly with the LLM. These command functions include three key enhancements: (1) automatic integration of the course outline context, ensuring LLM awareness of the structure; (2) a drag-and-drop interface for associating commands with specific course elements; and (3) the ability to save and reuse commands, reducing repetition and improving time-efficiency.

Figure 2: An example of a user developing a course outline with dynamic commands in UI Open. In this figure, command 3 is being executed on topic 2.

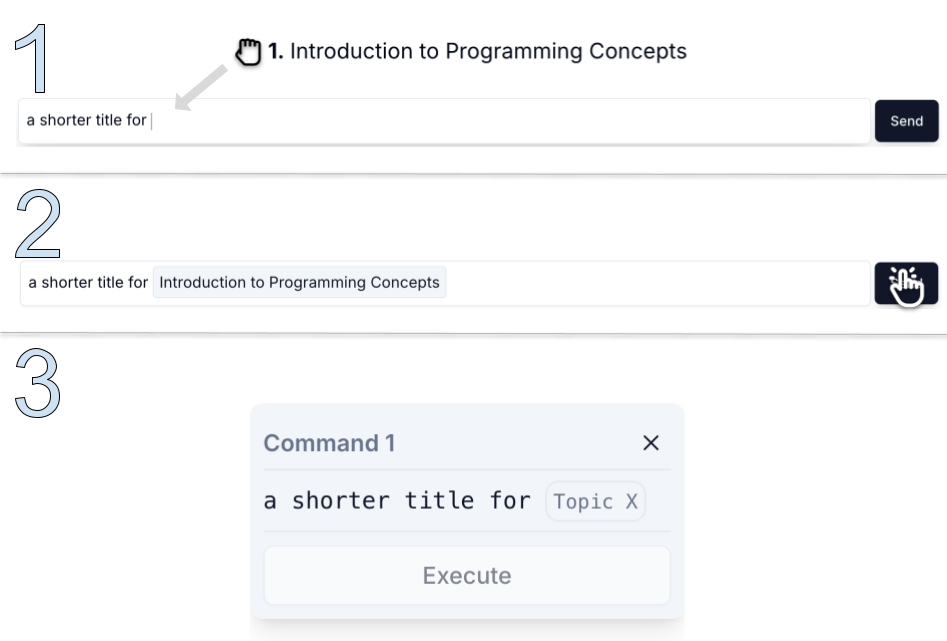

To enhance user-friendliness, UI Open incorporates a chat box at the bottom of the course outline interface. The outline representation remains largely unchanged (DM1), allowing manual edits via a click-based interface (DM2). Unlike UI Predefined, it removes predefined buttons, offering a more flexible approach. The chat box enables users to input, execute, and save dynamic commands for reuse, supporting DM2 and DM3. Commands can be global (affecting the entire outline) or local (applied to specific sections), adjusted by dragging topics into the chat box (DM2), as shown in Figure 3.

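Figure 3: UI Open: 1) the user creates a command while dragging a topic to localize it, 2) the command is executed and saved, and 3) any other topic can be dragged onto the saved command.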

3.3 Prompt Engineering

To achieve the desired results from an LLM in our UIs, prompts were tailored based on OpenAI’s best practices for prompt engineering [17]. To be more specific, using the gpt-4o-2024-08-06 model (the latest model at the time of the experiment), a system prompt was applied to (1) define the LLM’s persona, (2) delimit prompt components, (3) specify the task (course outline curation), and (4) establish the output format. Moreover, the current course outline and user-issued commands were always included in prompts, allowing the LLM to respond within context. The output format adhered to a defined structure, translated by the UI engine for display. To ensure consistency, each topic generated by the LLM was assigned an identifier, enabling precise referencing throughout interactions.
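The four parts of the system prompt and the always-included outline context can be sketched as follows. The study's actual prompt text, delimiters, and output schema are not given, so the wording of `SYSTEM_PROMPT`, the `<outline>` and `<command>` tags, the JSON layout, and the function names `build_messages` and `parse_outline` are all assumptions made for illustration. The sketch only assembles and validates messages; it does not call any model API.

```python
import json

# Hypothetical wording; the numbered comments map to the four parts named in the text.
SYSTEM_PROMPT = (
    "You are an experienced curriculum designer.\n"                   # (1) persona
    "The current outline is enclosed in <outline> tags and the "
    "user's command in <command> tags.\n"                             # (2) delimiters
    "Revise the course outline according to the command.\n"           # (3) task
    'Reply with JSON only: {"title": str, "learning_outcome": str, '
    '"topics": [{"id": str, "name": str}]}.'                          # (4) output format
)


def build_messages(outline, command, target_topic_id=None):
    """Return chat messages carrying the outline context and the user's command."""
    scope = f" (apply only to topic {target_topic_id})" if target_topic_id else ""
    user_content = (
        f"<outline>{json.dumps(outline)}</outline>\n"
        f"<command>{command}{scope}</command>"
    )
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_content},
    ]


def parse_outline(reply_text):
    """Parse a model reply and check that every topic carries an identifier."""
    outline = json.loads(reply_text)
    if any("id" not in topic for topic in outline["topics"]):
        raise ValueError("every topic needs an id")
    return outline


# Hypothetical outline and command used only for illustration.
outline = {"title": "Intro to Statistics", "learning_outcome": "Describe data",
           "topics": [{"id": "t1", "name": "Mean"}, {"id": "t2", "name": "Variance"}]}
messages = build_messages(outline, "Split this topic into two", target_topic_id="t2")
```

Passing topic identifiers in both directions is what lets a local command refer to one row of the outline without the user having to describe it in words.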

3.4 ChatGPT Replica Tool

To establish a control application for comparison with Predefined and Open UIs, we required a tool that emulated the ChatGPT interface. Direct usage of ChatGPT was unsuitable, as it did not allow for monitoring user interactions, nor did it guarantee the use of the same model utilized in our UIs. After exploring alternatives, we opted for open-webui (repository: https://github.com/open-webui/open-webui), an open-source project designed for interacting with LLMs. This tool offers an experience closely comparable to the original ChatGPT interface, making it a suitable choice for our experiment.

4 Evaluation

We conducted a user study to evaluate the effectiveness and usability of the UIs: UI Predefined, UI Open, and a control interface replicating ChatGPT (an anonymized demo of the experiment, including the different steps, is available at https://figshare.com/s/faa7fcea8bbf9d93ad1f). Participants from diverse educational backgrounds used each UI and completed a questionnaire capturing their experience and background. Below, we detail the experimental design, procedures, and analytical methods.

4.1 Participants

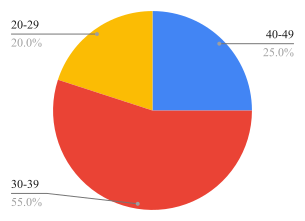

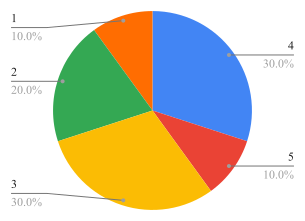

The study involved 20 participants, with teaching experience ranging from 1 to 21 years, providing a broad spectrum of perspectives on the usability and effectiveness of the UIs. They were evenly distributed by gender, with an equal number of females and males. Each participant had a minimum of five years of professional experience across diverse fields, including but not limited to Computer Science, Mathematics, Nursing, and Soft Skills. Additionally, participants exhibited varying degrees of familiarity with ChatGPT, ranging from low to very high proficiency. This range of teaching and technological backgrounds enabled us to collect feedback that reflects a diverse set of educational contexts. The demographic distribution of the participants is depicted in Figure 4.

Figure 4: Background information of the participants.

4.2 Questionnaire

The evaluation focused on two primary objectives: (1) assessing perceived workload and (2) measuring interface usability. We used the Raw NASA Task Load Index (NASA RTLX) to quantify task load and the System Usability Scale (SUS) to evaluate usability, both established methods in HCI research [11, 3]. NASA RTLX measures perceived workload in the dimensions of mental demand, physical demand, temporal demand, performance, effort, and frustration. SUS, on the other hand, covers ease of use, efficiency, consistency, and learnability. Data were collected through a three-step questionnaire: participants first completed the NASA RTLX to assess workload, then the SUS for usability evaluation, and finally answered an open-ended question for additional feedback. This approach combined quantitative and qualitative insights into the UIs’ effectiveness and usability.

4.3 Experiment Steps

Each participant followed a structured process to evaluate the three AI-powered UIs for course outline development:

1. Study Introduction (10 min). Participants were briefed on the study’s objectives, focusing on the workload and usability of the UIs.

2. Background Information (5 min). Demographic data, including teaching experience and prior ChatGPT familiarity, were collected.

3. Reference Course Outline (30 min). Participants created a baseline course outline in their teaching subject for comparison with different UI outputs.

4. Curriculum Development with UIs (3 rounds, 30 min each). Participants used each of the three UIs in randomized order, for a fair evaluation, spending 20 minutes per UI to create course outlines, followed by completing an evaluation form.

5. Final Evaluation (5 min). Participants provided suggestions for improvement and shared challenges.
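The randomized UI order in step 4 could be produced along the following lines. The paper does not say how the order was drawn, so this is a sketch under assumptions: the function name `assign_orders`, the UI labels, the participant identifiers, and the fixed seed are all hypothetical.

```python
import random

UIS = ["UI Predefined", "UI Open", "ChatGPT replica"]


def assign_orders(participant_ids, seed=0):
    """Give each participant an independently shuffled order of the three UIs."""
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    return {pid: rng.sample(UIS, k=len(UIS)) for pid in participant_ids}


# Hypothetical participant identifiers 1..20.
orders = assign_orders(range(1, 21), seed=42)
for pid in (1, 2, 3):
    print(pid, orders[pid])
```

Independent shuffling does not guarantee that each of the six possible orders is used equally often; a fully counterbalanced design would cycle through all permutations instead.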

5 Results and Discussion

5.1 Results

All 20 participants completed the study (the raw data, including the background information of the participants, the questionnaires, and the participants’ answers, is available at https://figshare.com/s/76c780adbae8f3d22f07). Results showed UI Predefined significantly outperformed ChatGPT in workload, usability, and efficiency, while UI Open ranked second with a non-significant improvement over ChatGPT. We used the Wilcoxon signed-rank test [24] to analyze differences in workload and usability, with statistical analysis conducted in Python 3.12 using scipy. p-values were reported to assess significance.
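To make the paired comparison concrete, the sketch below computes a two-sided Wilcoxon signed-rank test using only the standard library. It is not the study's analysis code: the study reports using scipy (presumably `scipy.stats.wilcoxon`), whereas this version uses the large-sample normal approximation without tie or continuity correction, so its p-values can differ from scipy's for small samples. The function name and the sample scores are hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test for paired samples (normal approximation).

    Returns (W, p), where W is the smaller of the positive and negative rank sums.
    Zero differences are dropped; tied absolute differences share average ranks.
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, averaging ranks within ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)

    # Under the null hypothesis W is approximately normal with this mean and sd.
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w, min(p, 1.0)


# Hypothetical per-participant workload scores for two UIs (not the study's data).
ui_a = [2.0, 2.5, 1.8, 3.0, 2.2, 2.7, 1.9, 2.4]
ui_b = [3.1, 3.4, 2.9, 3.2, 3.6, 2.6, 3.0, 3.5]
w, p = wilcoxon_signed_rank(ui_a, ui_b)
print(f"W = {w}, p = {p:.4f}")
```

A signed-rank test suits this within-subjects design because every participant rated all three UIs, so scores are paired rather than independent.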

5.1.1 Workload and Performance

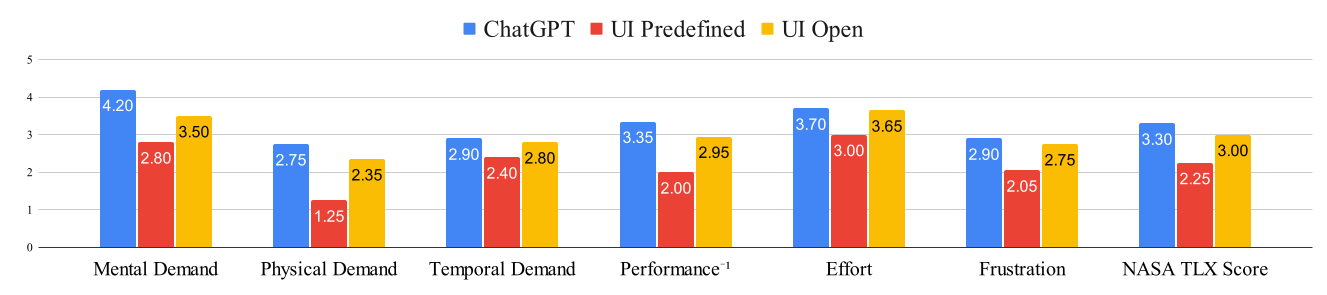

To compute the NASA RTLX scores, we transformed the Performance dimension into Performance$^{-1}$ by subtracting the original value from 8, so that lower scores indicate better performance (Performance$^{-1}$ ranges from 1 to 7, like the other dimensions). UI Predefined had the lowest workload with a mean score of 2.25, followed by UI Open (3.00) and ChatGPT with the highest workload (3.30). The adjusted Performance metric further emphasized the efficiency gap, with ChatGPT showing a consistently higher workload compared to both developed UIs. These findings highlight the superior efficiency and reduced cognitive load of UI Predefined and UI Open over the control. Detailed results and p-values are presented in Table 1 and visualized in Figure 5.
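The scoring step can be sketched as below, assuming the overall RTLX score is the unweighted mean of the six dimensions after the Performance inversion described above (the text reports means on the 1 to 7 scale, which is consistent with this). The dictionary keys and the function name `rtlx_score` are hypothetical, and the ratings are made up for illustration.

```python
DIMENSIONS = ("mental", "physical", "temporal", "performance", "effort", "frustration")


def rtlx_score(ratings):
    """Raw TLX: unweighted mean of six 1-7 ratings, with Performance inverted."""
    for name in DIMENSIONS:
        if not 1 <= ratings[name] <= 7:
            raise ValueError(f"{name} rating must be between 1 and 7")
    adjusted = dict(ratings)
    # Inverted Performance = 8 - Performance, so lower always means less workload.
    adjusted["performance"] = 8 - ratings["performance"]
    return sum(adjusted[name] for name in DIMENSIONS) / len(DIMENSIONS)


# Hypothetical ratings from one participant for one UI.
example = {"mental": 3, "physical": 1, "temporal": 2,
           "performance": 6, "effort": 3, "frustration": 1}
print(rtlx_score(example))  # 2.0
```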

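Figure 5: Mean results for each NASA RTLX metric. Lower values indicate lower workload. (Performance$^{-1}$ is defined as $8 - \text{Performance}$.)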

| UI A (mean) | UI B (mean) | Difference | p-value |
|---|---|---|---|
| UI Predefined (2.25) | ChatGPT (3.30) | 1.05 | < 0.03 |
| UI Predefined (2.25) | UI Open (3.00) | 0.75 | < 0.02 |
| UI Open (3.00) | ChatGPT (3.30) | 0.30 | - |

Table 1: Workload comparison.

5.1.2 Usability

The System Usability Scale (SUS) results show UI Predefined achieving the highest usability score of 86.75, well above the "Excellent" threshold [3]. In comparison, UI Open scored 70.75, and ChatGPT scored 69.00, both within the "OK" category. These findings highlight the superior ease of use and participant satisfaction with UI Predefined. Detailed p-values are in Table 2, with results visualized in Figure 6.
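For readers unfamiliar with how a 0 to 100 SUS score arises from ten questionnaire items, the sketch below applies the standard SUS scoring rule: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5. The paper does not spell out its scoring procedure, so treating it as the standard rule is an assumption; the function name and the sample responses are hypothetical.

```python
def sus_score(responses):
    """Standard SUS scoring for ten responses on a 1-5 scale, in questionnaire order."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("expected ten responses between 1 and 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:   # odd items are positively worded
            total += response - 1
        else:                      # even items are negatively worded
            total += 5 - response
    return total * 2.5


# Hypothetical responses from one participant.
print(sus_score([5, 1, 5, 2, 4, 1, 5, 1, 4, 2]))  # 90.0
```

Per-participant scores computed this way are what a per-UI mean, such as the ones reported above, would average over.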

Figure 6: SUS scores of the UIs. The minimum, maximum, and first, second, and third quartiles are shown. The dashed line is […].

| UI A (mean) | UI B (mean) | Difference | p-value |
|---|---|---|---|
| UI Predefined (86.75) | ChatGPT (69.00) | 17.75 | < 0.009 |
| UI Predefined (86.75) | UI Open (70.75) | 16.00 | < 0.002 |
| UI Open (70.75) | ChatGPT (69.00) | 1.75 | - |

Table 2: Usability comparison.

5.2 Discussion

Our findings indicated that the UI with predefined commands outperformed the others in both usability (SUS) and workload (NASA RTLX) dimensions. This superiority was attributed to its ability to streamline the course outline creation process. Eight participants specifically noted that predefined commands significantly reduced their typing burden, while ten emphasized the overall ease of use.

While the UI with open commands also received positive feedback for its flexibility, participants acknowledged a steeper learning curve. This suggests that a hybrid approach, combining predefined and open commands, might offer the optimal balance of usability and adaptability. Although the ChatGPT UI received some preference due to participants’ familiarity, all participants agreed that features like command definition, reuse, and better output presentation were essential for enhancing their overall experience.

Despite the promising results of our study, there are limitations that must be acknowledged. The experiment was constrained to controlled environments, which may not accurately reflect the dynamic and diverse settings in which educators interact with LLMs in real-world scenarios. In addition, the study was conducted with a limited number of educators, which may limit the diversity of the sample. We tried to mitigate this limitation by inviting educators from diverse areas, ensuring representation across different backgrounds and demographics. These limitations suggest that future research should encompass a wider range of user backgrounds and real-world testing to enhance the applicability and robustness of UI designs in practical educational contexts.

6 Conclusion

In conclusion, this paper highlighted the growing role of online learning and the potential of LLMs in education. While LLMs enhance accessibility and personalization, they also pose challenges, particularly for educators. Key concerns include (1) the accuracy of LLM outputs and (2) the complexity of effective interaction. To address these, we proposed and evaluated two user interfaces, UI Predefined and UI Open, designed with DM principles to simplify educator interaction. These interfaces reduce reliance on prompt engineering and improve usability. Additionally, they mitigate the risks associated with output quality by fostering human-AI collaboration, integrating a human check to ensure accuracy and alignment with teachers’ standards.

Our findings revealed that the UI Predefined significantly outperformed ChatGPT, showcasing its potential to streamline educational interactions with a set of expert-derived commands that reduce cognitive load and enhance efficiency and usability. Although UI Open also showed improvements over ChatGPT by allowing more direct, context-rich interactions for educators, it did not exhibit a statistically significant advantage.

Building on our findings, future research should explore a hybrid approach that integrates elements of both UI Open and UI Predefined, balancing structured guidance with open-ended interaction. Additionally, expanding the study to a more diverse group of educators across various real-world settings will enhance the generalizability of the results. Finally, the impact of different UI designs on the learning processes should be investigated. This will help to improve learning as the ultimate goal of educational applications.

References

- [1] Abdi, S., Khosravi, H., Sadiq, S., Demartini, G.: Evaluating the quality of learning resources: A learnersourcing approach. IEEE Transactions on Learning Technologies 14(1), 81–92 (2021)

- [2] Bahrami, M., Sonoda, R., Srinivasan, R.: LLM diagnostic toolkit: Evaluating LLMs for ethical issues. In: 2024 International Joint Conference on Neural Networks (IJCNN). pp. 1–8. IEEE (2024)

- [3] Bangor, A., Kortum, P.T., Miller, J.T.: An empirical evaluation of the system usability scale. Intl. Journal of Human–Computer Interaction 24(6), 574–594 (2008)

- [4] Chen, B., Zhang, Z., Langrené, N., Zhu, S.: Unleashing the potential of prompt engineering in large language models: a comprehensive review. arXiv preprint arXiv:2310.14735 (2023)

- [5] Dang, H., Mecke, L., Buschek, D.: GANSlider: How users control generative models for images using multiple sliders with and without feedforward information. In: Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems. pp. 1–15 (2022)

- [6] Denny, P., Khosravi, H., Hellas, A., Leinonen, J., Sarsa, S.: Can we trust ai-generated educational content? comparative analysis of human and ai-generated learning resources. arXiv preprint arXiv:2306.10509 (2023)

- [7] Feng, K., Liao, Q.V., Xiao, Z., Vaughan, J.W., Zhang, A.X., McDonald, D.W.: Canvil: Designerly adaptation for llm-powered user experiences. arXiv preprint arXiv:2401.09051 (2024)

- [8] Gros, B., García-Peñalvo, F.J.: Future trends in the design strategies and technological affordances of e-learning. In: Learning, design, and technology: An international compendium of theory, research, practice, and policy, pp. 345–367. Springer (2023)

- [9] Hutchins, E.L., Hollan, J.D., Norman, D.A.: Direct manipulation interfaces. Human–computer interaction 1(4), 311–338 (1985)

- [10] Kawamata, T., Matsuda, Y., Sekiya, T., Yamaguchi, K.: Analysis of computer science textbooks by topic modeling and dynamic time warping. In: 2021 IEEE International Conference on Engineering, Technology & Education (TALE). p. 865–870. IEEE, Wuhan, Hubei Province, China (Dec 2021). https://doi.org/10.1109/TALE52509.2021.9678834, https://ieeexplore.ieee.org/document/9678834/

- [11] Kosch, T., Karolus, J., Zagermann, J., Reiterer, H., Schmidt, A., Woźniak, P.W.: A survey on measuring cognitive workload in human-computer interaction. ACM Computing Surveys 55(13s), 1–39 (2023)

- [12] Liu, Y., Wen, Z., Weng, L., Woodman, O., Yang, Y., Chen, W.: Sprout: an interactive authoring tool for generating programming tutorials with the visualization of large language models. IEEE Transactions on Visualization and Computer Graphics (2024)

- [13] Mariani, A., Pellegrini, E., De Momi, E.: Skill-oriented and performance-driven adaptive curricula for training in robot-assisted surgery using simulators: A feasibility study. IEEE Transactions on Biomedical Engineering 68(2), 685–694 (Feb 2021)

- [14] Masson, D., Malacria, S., Casiez, G., Vogel, D.: Directgpt: A direct manipulation interface to interact with large language models. In: Proceedings of the CHI Conference on Human Factors in Computing Systems. pp. 1–16 (2024)

- [15] Moein, M., Molavi, M., Faraji, A., Tavakoli, M., Kismihók, G.: Beyond search engines: Can large language models improve curriculum development? In: 19th European Conference on Technology Enhanced Learning, EC-TEL 2020, Krems, Austria, September 16–20, 2024. Springer (2024)

- [16] Molavi, M., Tavakoli, M., Kismihók, G.: Extracting topics from open educational resources. In: Addressing Global Challenges and Quality Education: 15th European Conference on Technology Enhanced Learning, EC-TEL 2020, Heidelberg, Germany, September 14–18, 2020, Proceedings 15. pp. 455–460. Springer (2020)

- [17] OpenAI: Prompt engineering - openai api (2024), https://platform.openai.com/docs/guides/prompt-engineering, [Online; accessed Sep. 2024]

- [18] Ozmen Garibay, O., Winslow, B., Andolina, S., Antona, M., Bodenschatz, A., Coursaris, C., Falco, G., Fiore, S.M., Garibay, I., Grieman, K., et al.: Six human-centered artificial intelligence grand challenges. International Journal of Human–Computer Interaction 39(3), 391–437 (2023)

- [19] Padovano, A., Cardamone, M.: Towards human-ai collaboration in the competency-based curriculum development process: The case of industrial engineering and management education. Computers and Education: Artificial Intelligence 7, 100256 (2024)

- [20] Shneiderman, B.: Direct manipulation: A step beyond programming languages. Computer 16(08), 57–69 (1983)

- [21] Tavakoli, M., Faraji, A., Molavi, M., T. Mol, S., Kismihók, G.: Hybrid human-ai curriculum development for personalised informal learning environments. In: LAK22: 12th International Learning Analytics and Knowledge Conference. pp. 563–569 (2022)

- [22] Tavakoli, M., Faraji, A., Vrolijk, J., Molavi, M.d., Mol, S.T., Kismihók, G.: An ai-based open recommender system for personalized labor market driven education. Advanced Engineering Informatics 52, 101508 (2022)

- [23] Wang, B., Li, Y., Lv, Z., Xia, H., Xu, Y., Sodhi, R.: Lave: Llm-powered agent assistance and language augmentation for video editing. In: Proceedings of the 29th International Conference on Intelligent User Interfaces. pp. 699–714 (2024)

- [24] Woolson, R.F.: Wilcoxon signed-rank test. Encyclopedia of Biostatistics 8 (2005)

- [25] Xu, W., Dainoff, M.J., Ge, L., Gao, Z.: Transitioning to human interaction with ai systems: New challenges and opportunities for hci professionals to enable human-centered ai. International Journal of Human–Computer Interaction 39(3), 494–518 (2023)